This paper introduces the AI Data Community—a privacy-preserving ecosystem that empowers small and medium-sized enterprises (SMEs) to securely share data and collaboratively develop AI-driven solutions. (Diagram: courtesy of the Purdue ManuFuture Today team.)

- The AI Data Community (AI-DC) is a novel framework that enables small and medium-sized enterprises (SMEs) to safely participate in various data commons configurations and co-develop AI tools, overcoming limits in data access, expertise, and privacy.

- Built on the MyDataCan privacy infrastructure, AI-DCs let SMEs control and combine their data using integrated privacy-preserving technologies while enabling collective analytics.

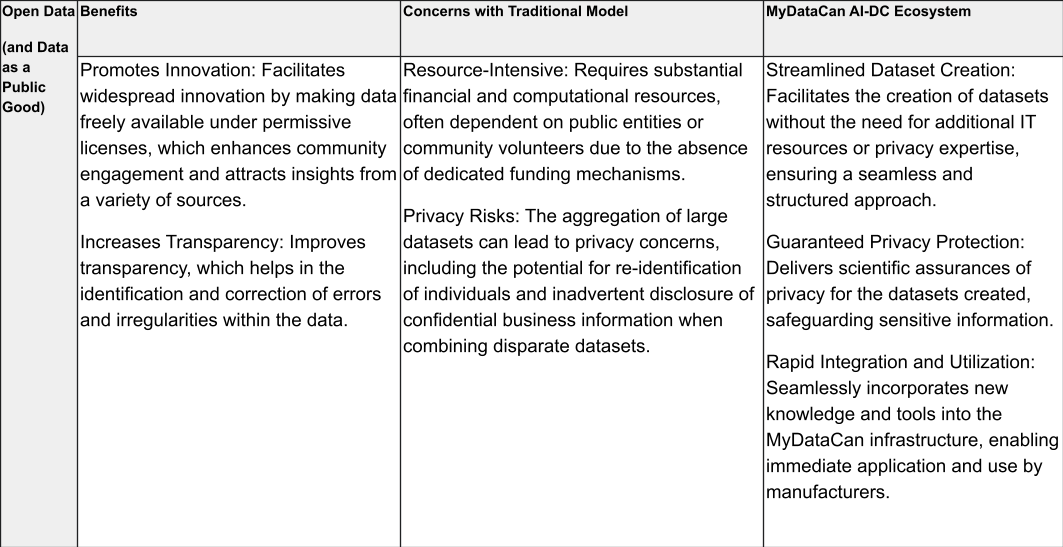

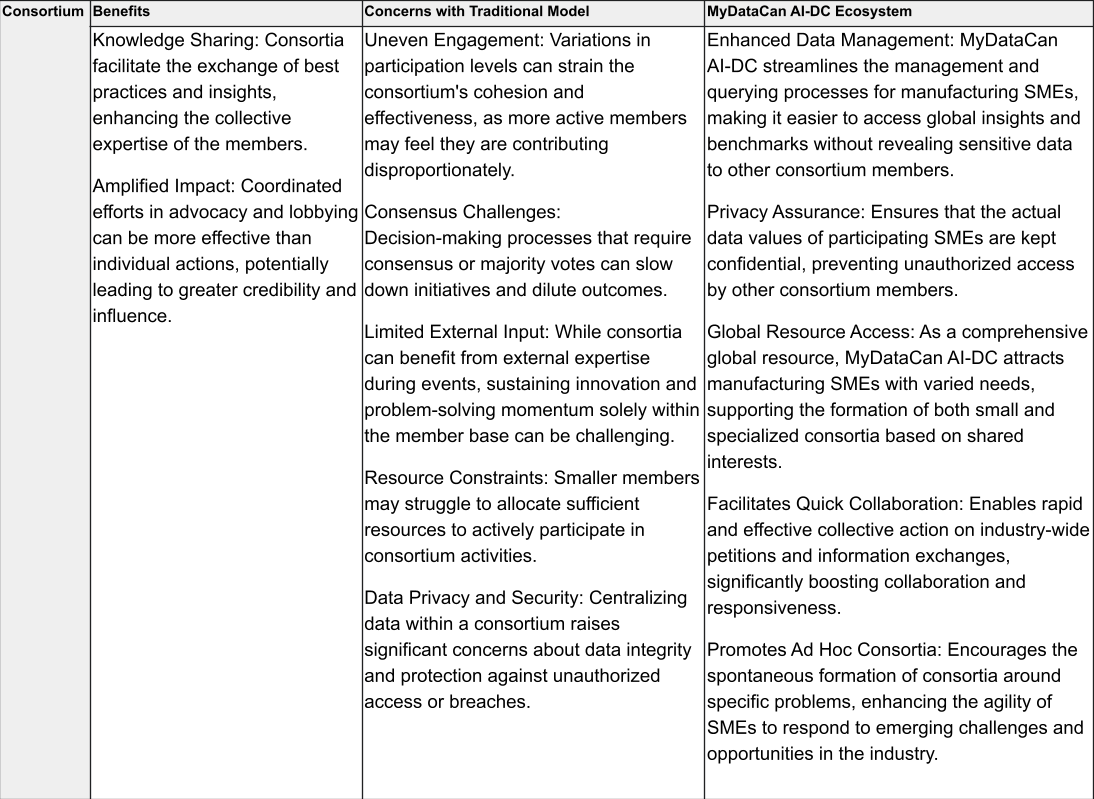

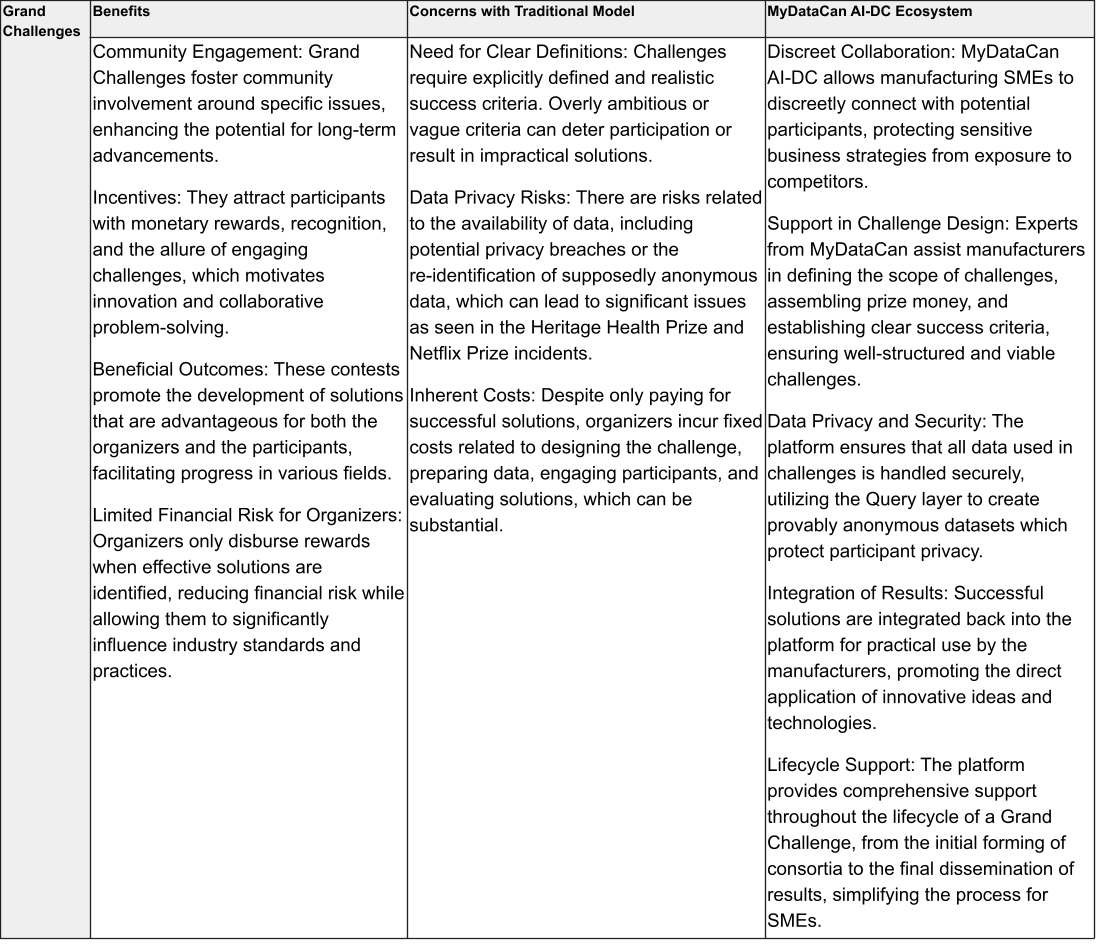

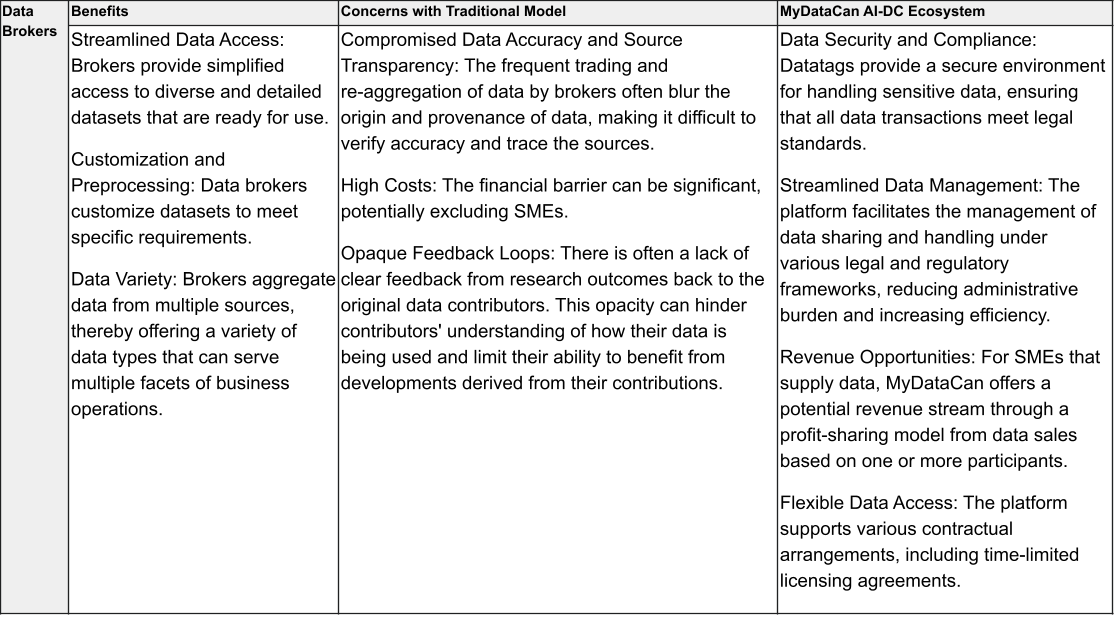

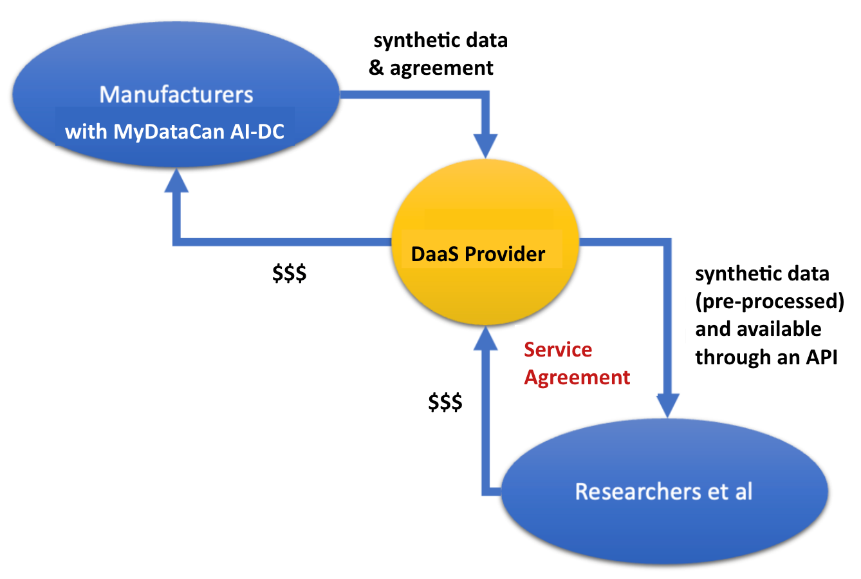

- Standard data commons models are enhanced when executed within an AI-DC, offering consortium benefits like cost-sharing (in SaaS and DaaS), revenue opportunities (via Data Brokers), and safe participation in open innovation efforts (like Grand Challenges), while overcoming traditional limitations related to privacy and scalability.

Abstract

Small and medium-sized enterprises (SMEs) often lack access to the extensive data and AI expertise needed to fully benefit from artificial intelligence. While data commons offer a pathway for pooling data and resources, traditional models fail to provide the privacy, confidentiality, and operational assurances required for SME participation. This paper introduces the AI Data Community (AI-DC), a novel framework that enables SMEs to safely and effectively participate in multiple data commons models while co-creating tailored AI innovations. Built on the MyDataCan privacy infrastructure, the AI-DC ecosystem fosters secure collaboration between manufacturers, researchers, and commercial providers—connecting real-world problems with cutting-edge solutions while safeguarding sensitive data.

Results summary:

Compared to traditional data commons: (1) In Open Data models, the AI-DC ensures scientific privacy guarantees in dataset creation; (2) In Consortia, it facilitates rapid collaboration without exposing raw data; (3) In Grand Challenges, it supports privacy-preserving data sharing and integration of outcomes; (4) In Data Broker contexts, it streamlines legal compliance and enables secure, transparent data monetization; (5) In Data as a Service (DaaS), it allows SMEs to share access costs while enabling privacy-preserving reuse; and (6) In Software as a Service (SaaS), it supports the collaborative development of custom AI tools. Across all models, the AI-DC lowers barriers, strengthens privacy, and expands access to innovation for SMEs.

Introduction

The need for an AI data commons

Society is currently in the midst of a technological revolution in artificial intelligence (AI) driven by massive amounts of data. Left behind are organizations that lack the volumes of data and the know-how needed to train AI tools to improve, expand, and rethink their operations. This data and skills gap puts many organizations, especially small and medium-sized enterprises (SMEs), at a competitive disadvantage, limiting their ability to keep pace and to address their (and society’s) most pressing challenges. Many of these challenges are too complex and multifaceted for any single SME to address alone. To develop meaningful solutions, organizations of all sizes, industries, and structures must collaborate and pool their resources, especially their data, through collective efforts that offer privacy guarantees.

AI algorithms rely on large datasets to accurately identify patterns and relationships within the data [1]. The companies currently best positioned to benefit from AI are larger enterprises with access to extensive internal datasets, skilled analysts, and additional data sources through existing vertical and horizontal integrations. When training datasets are too small, the correlations an algorithm detects may be unreliable, arising from chance or random fluctuations rather than meaningful statistical relationships. Effectively training an AI algorithm typically requires more data than a single SME can access or procure.

To fully leverage the potential of AI, SMEs must establish formal collaborative relations with other stakeholders. These partnerships should enable data sharing, analysis, and joint solution development, involving not only collaborators within the supply chain but even competitors.

A “data commons” is a shared infrastructure designed for storing, accessing, and analyzing large datasets. It enables the integration and sharing of data from diverse sources, fostering collaboration and supporting decision-making across various fields, including healthcare, environmental science, and genomics.

But data sharing across organizational and sectoral boundaries requires safeguarding the privacy of employees, clients, collaborators, research subjects, and customers as well as maintaining the confidentiality of business processes and operations. We define this blend of communal resources, responsible relationships, and shared infrastructure with strong privacy and confidentiality safeguards as a data community—an information ecosystem where multiple stakeholders with diverse goals and resources leverage a curated pool of data and privacy-preserving tools for mutual benefit.

For a data community to succeed, it must:

- Facilitate data sharing, collaboration, and innovation among stakeholders with differing objectives;

- Address concerns around data privacy and confidentiality; and

- Provide appropriate incentives to ensure sustained stakeholder engagement.

In essence, a data community integrates a data commons within a broader ecosystem, characterized by interconnected incentives and robust privacy and confidentiality guarantees, fostering participation, productivity, and long-term sustainability.

This paper introduces the concept of data communities and a specialized type designed to democratize the power of AI, which we term an AI Data Community (AI-DC). We highlight why traditional models for establishing data commons are inadequate on their own for AI applications and aim to bring greater definitional clarity to a field where key terms are often used inconsistently, ambiguously, or with overlapping meanings.

The paper explores the principles of data commons and uses AI in manufacturing—focusing specifically on SMEs—as a case study to illustrate the strengths and limitations of various data commons models.

AI and Collaboration Among Manufacturing SMEs

Manufacturing exemplifies a sector with significant potential for collaborative partnerships that can yield mutual benefits and innovations [2]. Traditionally, relationships in manufacturing were structured linearly, with one-to-one supplier-buyer contracts forming the backbone of operations. However, companies are increasingly engaging in broader collaborations that involve actors from adjacent industries [3].

A notable example is the Open Automotive Alliance, launched in 2014, which unites companies from diverse sectors to “bring the Android platform to cars.” Its international membership includes automotive giants like Audi, Ford, and Toyota, alongside technology leaders such as Google, Garmin, and LG [4].

Partnerships may be even more essential for the approximately 74 percent of U.S. manufacturing firms that have fewer than 20 employees [5]. For manufacturing SMEs, adopting AI through data-sharing partnerships with other manufacturers, suppliers, and researchers can unlock significant benefits, such as enhanced efficiency, reduced costs, improved quality, and minimized downtime. Applications include data-driven predictive maintenance of machinery as well as demand and inventory forecasting.

However, owing to their small size and limited resources, many SMEs are either unaware of AI’s potential or lack the data, expertise, or access to a trustworthy infrastructure needed to engage in collaborative opportunities effectively [6] [7] [8] [9].

Some countries have started initiatives to provide data sharing infrastructures for their enterprises, including SMEs [10]. For example, a European initiative spearheaded by Germany and France, called Gaia-X, seeks to “create a federated open data infrastructure based on European values regarding data and cloud sovereignty [11].”

One of Gaia-X’s primary goals is to establish "data spaces" focused on key areas such as energy, health, and manufacturing [12] [13]. Within these data spaces, data can be shared among “trusted partners”—including data providers, users, and intermediaries—while ensuring that each dataset remains stored at its origin and is transferred only when necessary [14] [15]. Although operational data spaces have been developed for agriculture and the automotive industry, and “lighthouse projects” for manufacturing are in progress [16], the initiative has faced significant delays, and several components are not yet fully functional [17].
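The principle of keeping data at its origin can be sketched as a federated computation in which each participant shares only a summary, never its raw records. This is a hypothetical illustration (the `Site` class and `pooled_mean` function are invented for this example), not the Gaia-X architecture. Sharing aggregates alone is also not a formal privacy guarantee: a summary computed over very few records can still reveal them.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """Hypothetical data-space participant; its raw records stay local."""
    name: str
    records: list[float] = field(repr=False)  # e.g., downtime hours per machine

    def local_summary(self) -> tuple[int, float]:
        # Only an aggregate leaves the site: (record count, sum of values).
        return len(self.records), sum(self.records)


def pooled_mean(sites: list[Site]) -> float:
    """Global mean computed from per-site summaries, without moving raw data."""
    count, total = 0, 0.0
    for site in sites:
        n, s = site.local_summary()
        count += n
        total += s
    if count == 0:
        raise ValueError("no records at any site")
    return total / count


sites = [Site("plant_a", [2.0, 4.0]), Site("plant_b", [6.0])]
print(pooled_mean(sites))  # 4.0
```

The coordinator obtains the same mean it would get from pooling all records centrally, while each plant's individual values never leave the plant.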

A significant hurdle is that a data commons, by itself, does not provide SMEs with the full benefits they could achieve. Robust privacy and confidentiality measures are critical to enabling the sharing of sensitive and valuable data without risking security breaches or the loss of competitive advantage. While non-sensitive data can be shared freely, privacy-preserving mechanisms allow all types of data, including sensitive data, to be used to address complex questions, provide essential metrics, and drive innovations that solve pressing challenges.

Another key limitation of a data commons is that it does not fully provide the services SMEs require to capitalize on AI innovations. Data sharing serves as a means to disseminate information, yet the end goal for SMEs is to address operational challenges effectively. Doing so involves identifying specific problems, acquiring the right data, deriving innovative solutions from this data, and implementing these solutions at a production-quality level, accompanied by the necessary training and support to ensure practical application.

To overcome the substantial challenges that can hinder their growth and survival, SMEs require more than a data commons; they need a comprehensive data community that integrates all of these components. This community should embed a privacy-preserving data commons within an ecosystem that supports continuous cycles of problem identification, solution development, and deployment. Such an integrated approach is crucial for enabling SMEs to fully leverage data-driven innovations.

The AI Data Community Ecosystem

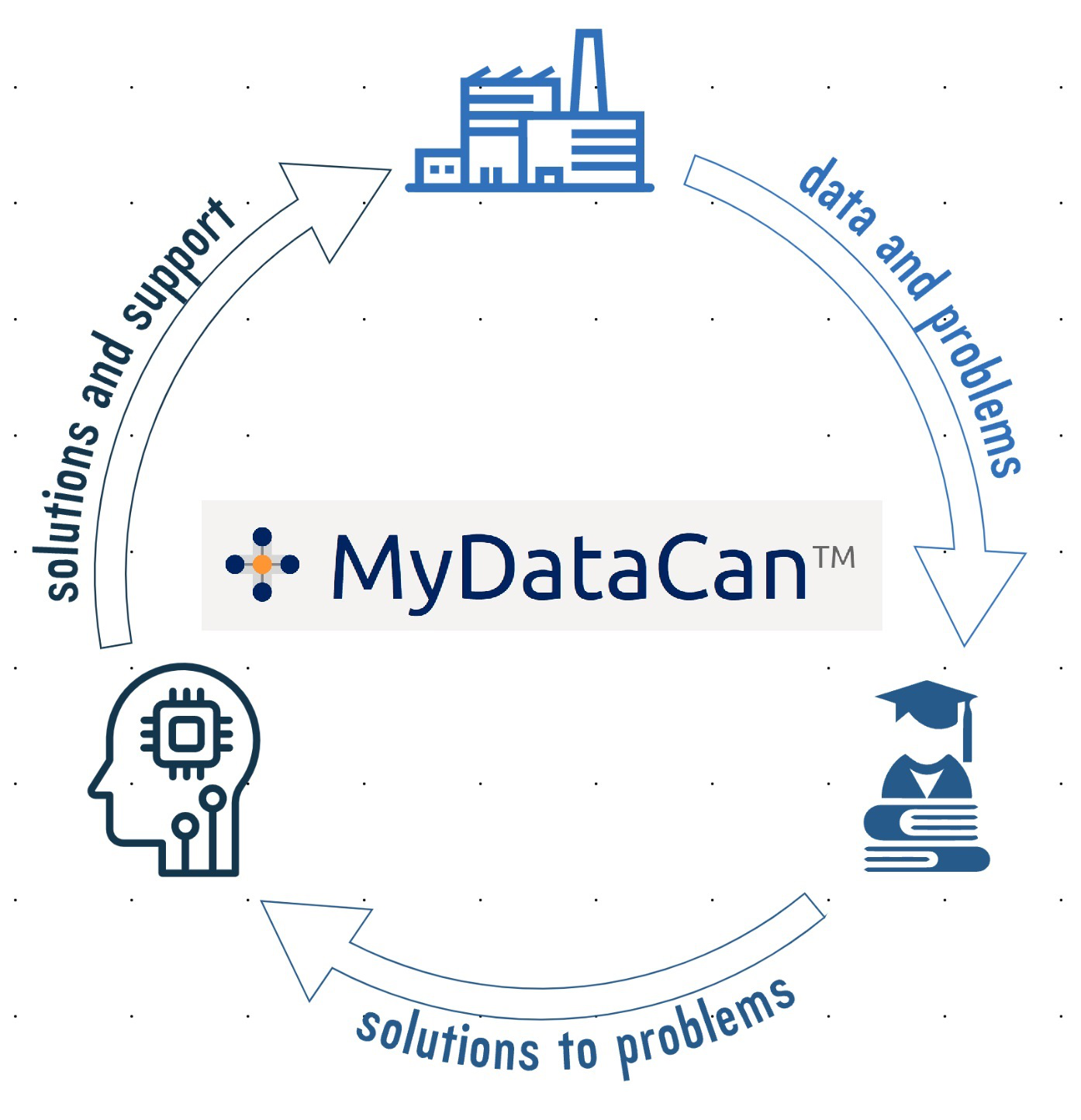

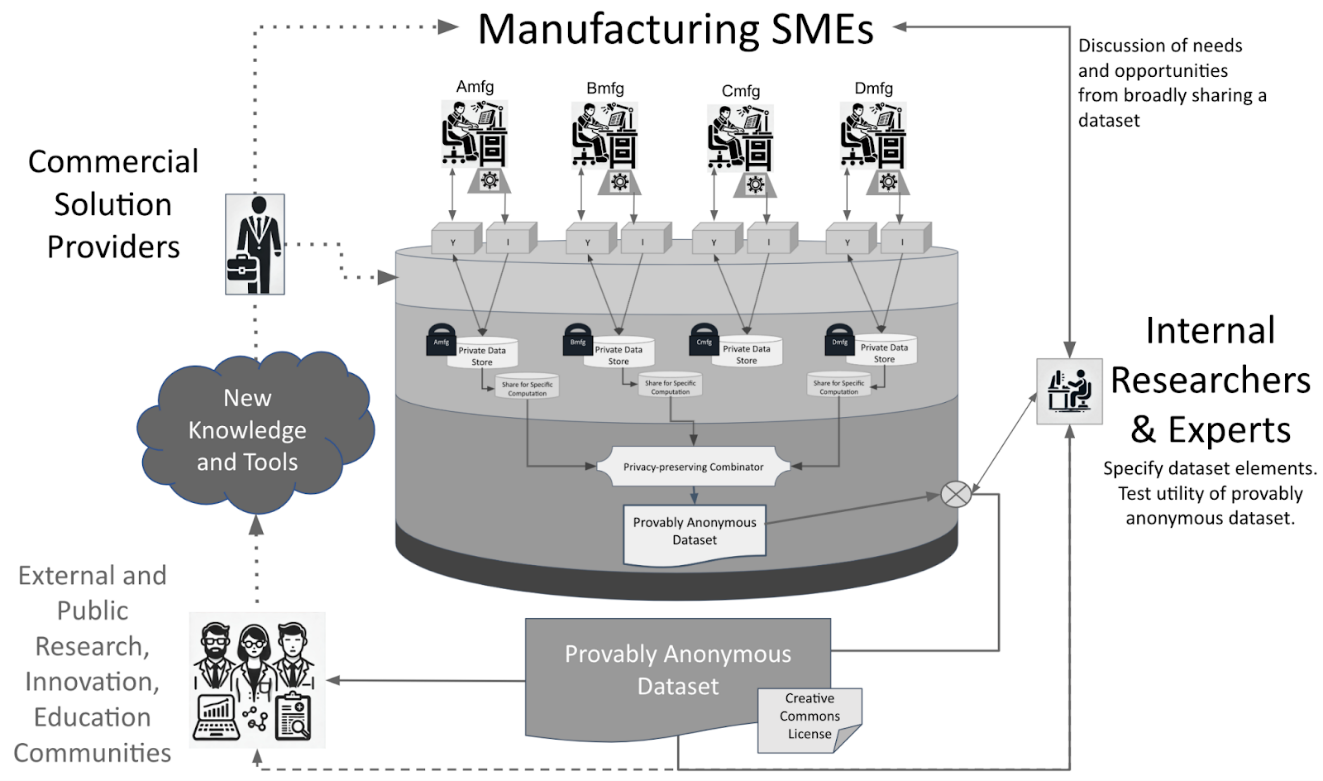

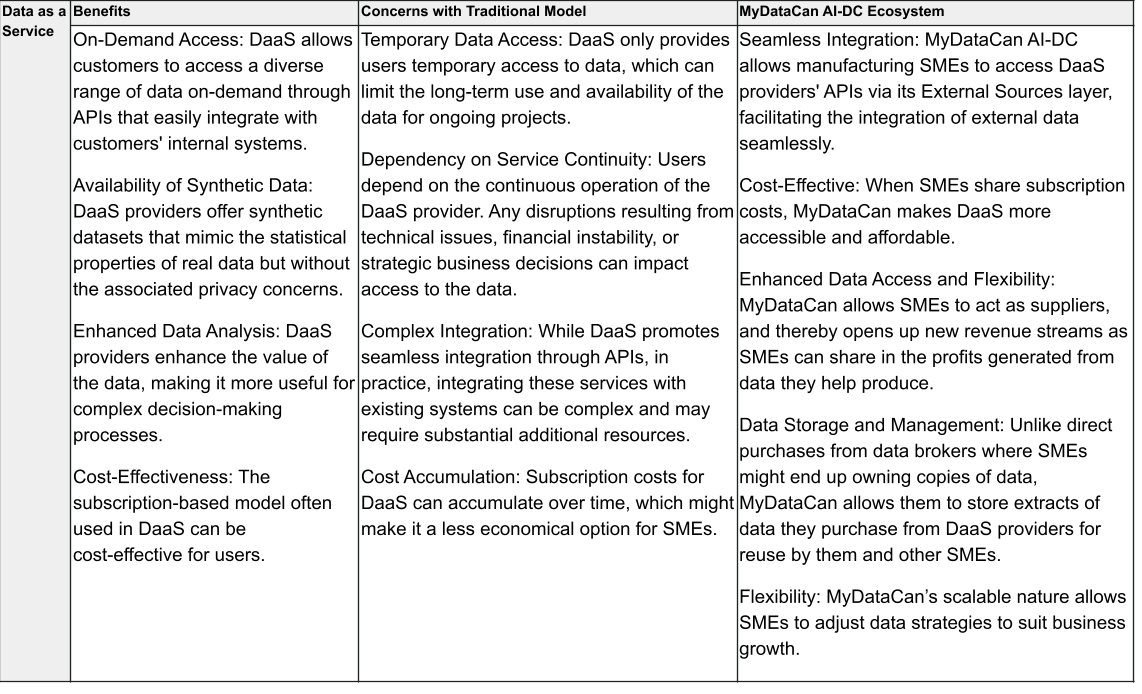

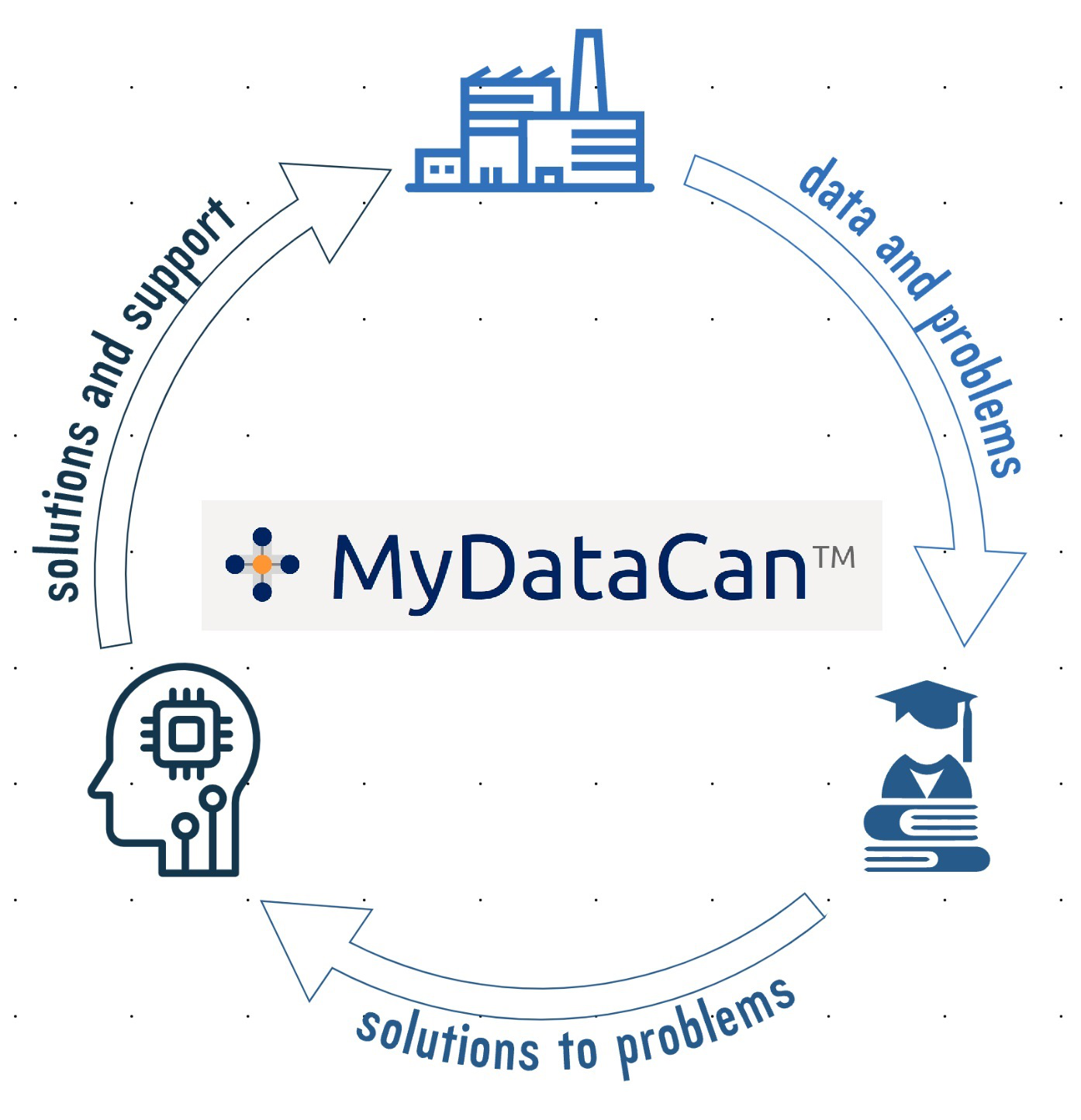

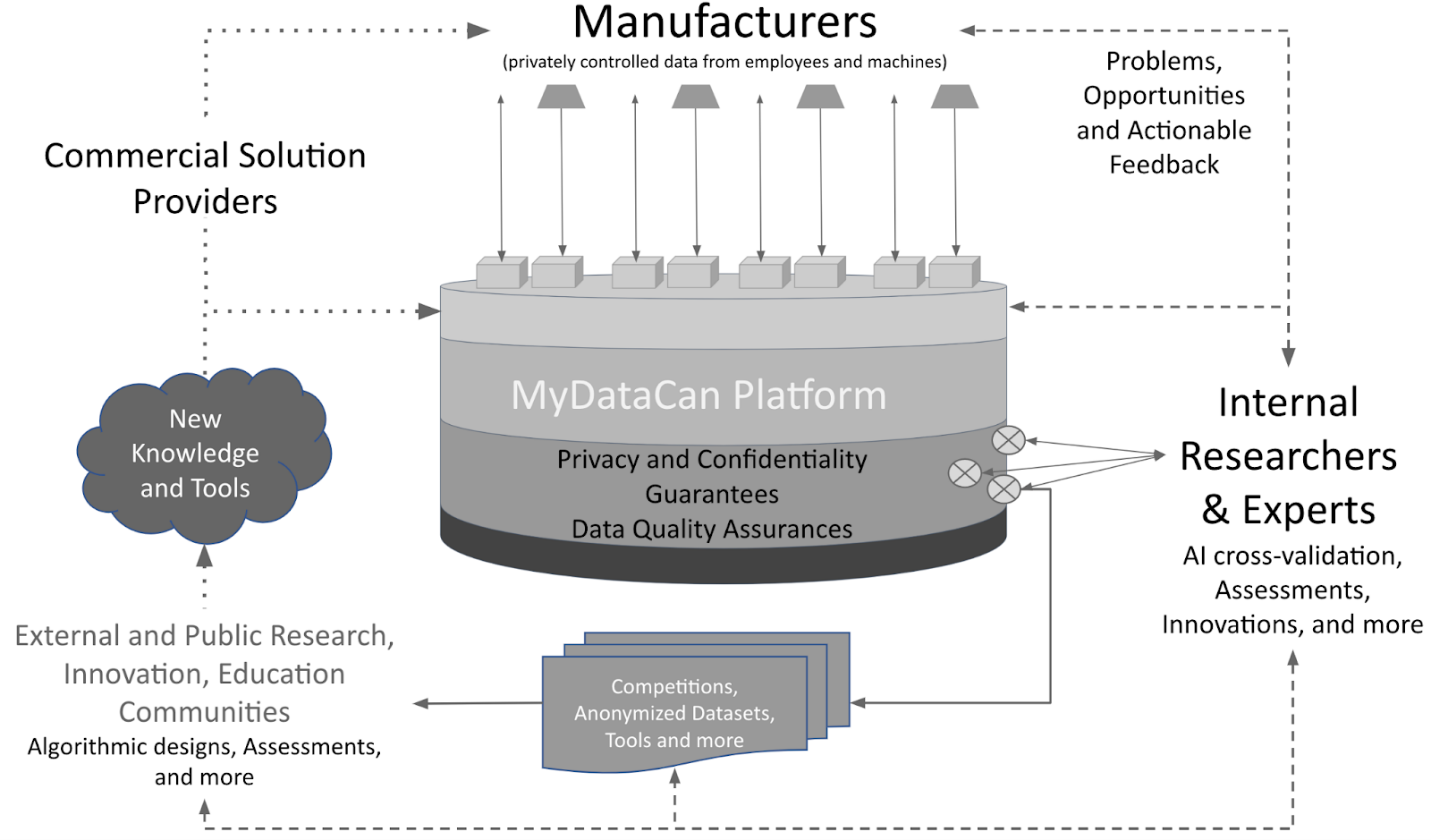

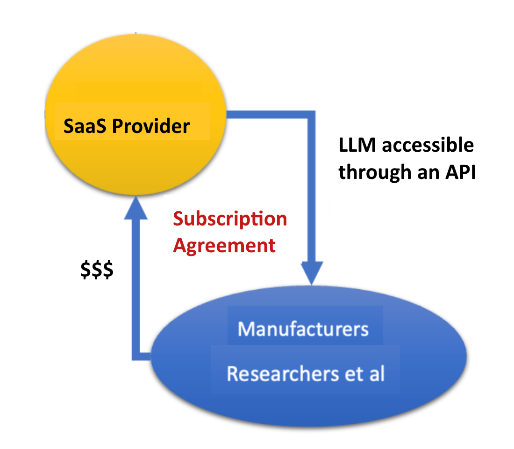

The AI-DC Ecosystem is a cyclical process in which SMEs contribute data and specify their challenges. Researchers and experts then analyze the provided data to craft solutions, which commercial providers may further refine for real-world application and deploy, with training and support, to participating SMEs. Various types of data commons drive this ecosystem and sustain the exchange among its participants. Figure 1 illustrates this cycle of innovation and support.

Figure 1. The AI Data Community (AI-DC) Ecosystem. In this ecosystem, small and medium-sized enterprises (SMEs) supply data and outline their challenges, which are addressed by researchers and experts who develop solutions. Commercial providers subsequently refine these solutions for practical application and then distribute these robust solutions with support and training to the SMEs. The ecosystem is powered by various forms of data commons that facilitate this dynamic exchange of data with guarantees of privacy and confidentiality. (Diagram: courtesy of the Purdue ManuFuture Today team.)

An AI-DC is designed to address specific challenges faced by SMEs, especially in the manufacturing sector. These SMEs often have access to internal data but not in sufficient quantities to develop AI tools independently. Additionally, while they possess deep domain expertise about their industries and operations, they frequently lack the technical knowledge required to leverage AI effectively. The AI-DC aims to bridge this gap by systematically combining domain and AI expertise to extract valuable insights from existing data. Solutions and derived knowledge are then made accessible to SMEs, accompanied by the necessary training and support for practical implementation within SMEs.

As depicted in Figure 1 and itemized in Figure 2, the AI-DC Ecosystem encompasses three primary participant groups (manufacturers, researchers, and commercial providers), each with distinct needs and contributions. Researchers require access to real-world data and clearly defined problems to develop new algorithms. Manufacturers, often lacking the capabilities to independently pursue AI innovations, gain operational and competitive advantages from technological advances specific to their needs. Commercial providers need meaningful solutions to offer manufacturers. The AI-DC facilitates this interaction by enabling manufacturers to share their challenges and data with researchers, who in turn create tailored innovations. These innovations are then delivered by commercial providers, creating a cycle of benefit that enhances the entire ecosystem.

Figure 2. An AI Data Community (AI-DC) Ecosystem addresses the pain points of the key stakeholders.

In this paper, “problem-solving” is interpreted in two ways: narrowly, as addressing known, well-defined issues that manufacturers have identified as critical; and more broadly, as “closing the innovation gap,” which involves recognizing previously unnoticed opportunities for improvement and tackling problems previously considered too challenging.

Structure of this paper

The Background section reviews historical examples of data commons. In the Methods section, we introduce a detailed model of an AI-DC, an ecosystem designed to unlock AI benefits for SMEs with privacy and confidentiality guarantees. The Results section then applies this model, powered by the real-world infrastructure of MyDataCan, to the examples of data commons discussed earlier, demonstrating practical and robust applications for such a platform [18]. Finally, the Discussion section evaluates the benefits and lessons learned from these applications, offering insights into future implementations and uses.

Background

A taxonomy of data commons

Numerous stakeholders have adopted the terms “data commons” or “AI commons” to label their initiatives [2]. In this paper, we define a data commons as any collaborative effort that enables stakeholders with diverse goals to share and analyze data, regardless of how these initiatives are otherwise named. We introduce a taxonomy of data commons, categorizing them into seven distinct types: Open Data, Data Consortia, Grand Challenges, Data Brokers, Data as a Service, Software as a Service, and Data as a Public Good. Each category is discussed in detail below. Together, these categories capture the diverse ways in which data commons are established and operate, as well as the benefits and challenges they encounter.

1. Open Data

Open data refers to datasets that are freely accessible, usable, and shareable by anyone with minimal restrictions. This concept emphasizes transparency, accessibility, and collaborative potential, encouraging the use and distribution of data without significant legal, technical, or financial barriers [19] [20]. Open datasets are often provided and maintained by governments, public agencies, and various institutions through platforms designed to enhance public knowledge, support research, facilitate application development, and promote innovation. These portals, or data commons, include both centralized and community-driven open-source ecosystems where data can be modified and enhanced by users globally. Open datasets are pivotal in driving data-driven decision-making and fostering an informed public, and open-data practices support a wide range of uses of data, from academic research to commercial product development and policymaking [21].

Google hosts a project known as Data Commons, which aggregates datasets from various sources into a unified “knowledge graph.” This platform simplifies user access to pre-processed data through a single API, thereby streamlining the typically labor-intensive processes of data cleaning, normalization, and integration [22] [23]. Google has further enhanced Data Commons by integrating large language models (LLMs), enabling users to query its data in natural language [24]. As an open-data initiative, Google's Data Commons is designed to be freely accessible, usable, and shareable with minimal restrictions, promoting widespread data utilization and innovation.

Governments and public agencies are often pivotal in providing open data. For example, Data.gov, managed by the U.S. General Services Administration, serves as the portal for the U.S. Government’s open data, offering over 300,000 datasets from various levels of government to support research, application development, data visualization, and more [25]. Globally, the World Bank offers its data through an open portal with similarly minimal restrictions [26].

Open-data portals can be curated by centralized institutions or by communities that form open-source ecosystems. A prime example is Wikidata, a collaboratively edited, multilingual knowledge graph that describes itself as a "free and open knowledge base" accessible and editable by both humans and machines [27]. It serves as a central repository for structured data used on Wikipedia and other sites, containing over 100 million data items with waived copyright, editable by anyone [28] [29]. Contributors can also self-organize into groups of users who work together to improve content related to a particular topic area, called WikiProjects [30].

The term "data commons" is also explicitly used by various open-data repositories managed by other government departments, universities, and federal agencies. These include the U.S. Department of Agriculture’s Ag Data Commons [31], Penn State Data Commons [32], and the National Institutes of Health (NIH) Cancer Research Data Commons. The NIH commons includes specialized sub-data commons such as the Genomic Data Commons and Imaging Data Commons [33], which host open datasets as well as others accessible only after NIH review [34]. Additionally, the International Telecommunication Union, a UN agency for digital technology, launched a project to “build a public AI utility” using an open-data model to support the UN Sustainable Development Goals [35].

2. Data Consortia

Organizations have long leveraged consortia to combine resources and expertise toward shared objectives. Industry consortia, which are collaborations among businesses with common goals, offer significant benefits but also come with certain limitations. These consortia are most effective when members share a clear, unified vision or goal. By pooling resources, knowledge, and technology, consortia can drive innovation faster than individual companies could on their own, particularly in areas that are too costly or complex to tackle independently. They also promote standardization across industries, enhancing product and service compatibility and interoperability, which can lead to greater market adoption and increased consumer satisfaction. Moreover, consortia enable networking and partnerships, allowing members to capitalize on each other’s strengths and expand their market presence.

However, these collaborations can face challenges such as conflicts of interest among members, which may slow decision-making and impede the execution of initiatives [36]. Additionally, when major players collaborate, they can unintentionally suppress smaller competitors. The costs associated with consortium membership may also exclude smaller organizations from participating.

In a data-sharing consortium, member organizations gain access to a pool of data broader than their own, giving them an industry-wide or broader perspective that often helps them improve their own decision-making or understanding. Insights generated from these pooled datasets are usually shared only among consortium members rather than disseminated widely [37].

The Association of American Universities Data Exchange (AAUDE) is an example of a traditional data-sharing consortium. With about 70 member institutions—mostly US research universities—the AAUDE is set up to “improve the quality and usability of information about higher education” by sharing data such as salary ranges, employment benefits, student retention and graduation rates, and enrollment numbers, as well as tuition and fees [38] [39] [40]. By gaining access to a broader pool of data, member institutions can make better-informed decisions about their own hiring practices, admissions policies, and financial issues.

The Higher Education Data Sharing Consortium (HEDS) brings together almost 200 educational institutions to facilitate “the exchange of data and professional knowledge among member institutions in an atmosphere of trust, collegiality, and assured confidentiality [36].” HEDS members share data to improve educational practices, foster better learning environments, and enhance organizational performance. Data sharing in the HEDS is voluntary, but, in the spirit of reciprocity, reports on shared data are available only to member institutions that opt to contribute their own [37] [41] [42].

Both the AAUDE and the HEDS emphasize the importance of data-driven decision-making to contribute to the strategic planning and operational effectiveness of their member institutions. By sharing data and insights, these consortia help member organizations benchmark their performance against similar organizations and identify areas for improvement.
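The give-to-get rule and peer benchmarking described above can be sketched together. The function below is hypothetical and does not reflect how AAUDE or HEDS actually compute their reports: it refuses a report to any member that has not contributed data, and otherwise returns the member's position relative to its peers as aggregates rather than individual peer values.

```python
import statistics
from bisect import bisect_left


def benchmark_report(submissions: dict[str, float], requester: str) -> dict:
    """Peer benchmark for `requester` under a hypothetical give-to-get rule.

    `submissions` maps each contributing member to its metric
    (e.g., a graduation rate). Non-contributors receive no report.
    """
    if requester not in submissions:
        raise PermissionError(f"{requester} has not contributed data")
    own = submissions[requester]
    peers = sorted(v for k, v in submissions.items() if k != requester)
    if not peers:
        raise ValueError("no peers to benchmark against")
    below = bisect_left(peers, own)  # number of peers strictly below `own`
    return {
        "own_value": own,
        "peer_count": len(peers),
        "percent_of_peers_below": 100.0 * below / len(peers),
        "peer_median": statistics.median(peers),
    }


submissions = {"univ_a": 0.80, "univ_b": 0.70, "univ_c": 0.90, "univ_d": 0.60}
print(benchmark_report(submissions, "univ_a"))
```

With only a few peers, even aggregates such as a median can expose an individual member's value, so small peer groups generally call for additional safeguards.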

Other data consortia go beyond simply extracting and sharing insights from pooled data and try to shape the broader frameworks that govern how datasets are generated, shared, and controlled. For instance, the World Wide Web Consortium (W3C) brings together several hundred member organizations from the private sector, government, nonprofits, and academia, as well as individual stakeholders, in an attempt to “lead the Web to its full potential [43].” The W3C pursues this goal primarily by developing technical standards and guidelines for the web that ensure a consistent level of quality and compatibility, and by attempting to solve system-wide challenges (e.g., online security) [43]. The W3C’s focus is less on data sharing than on convening members to develop the policy, standards, and thought leadership that have dramatically shaped the standards and practices of the World Wide Web.

Launched in 2016, the AI Commons is a nonprofit initiative that collaborates with AI practitioners, entrepreneurs, academia, NGOs, and AI industry organizations. Its mission is “promoting AI for Good and bringing the benefits of AI to everyone and utilizing the technology towards social and economic improvement [44].” This consortium focuses on advocacy and outreach to foster the use of AI as an end in itself, rather than primarily on data sharing to solve specific problems.

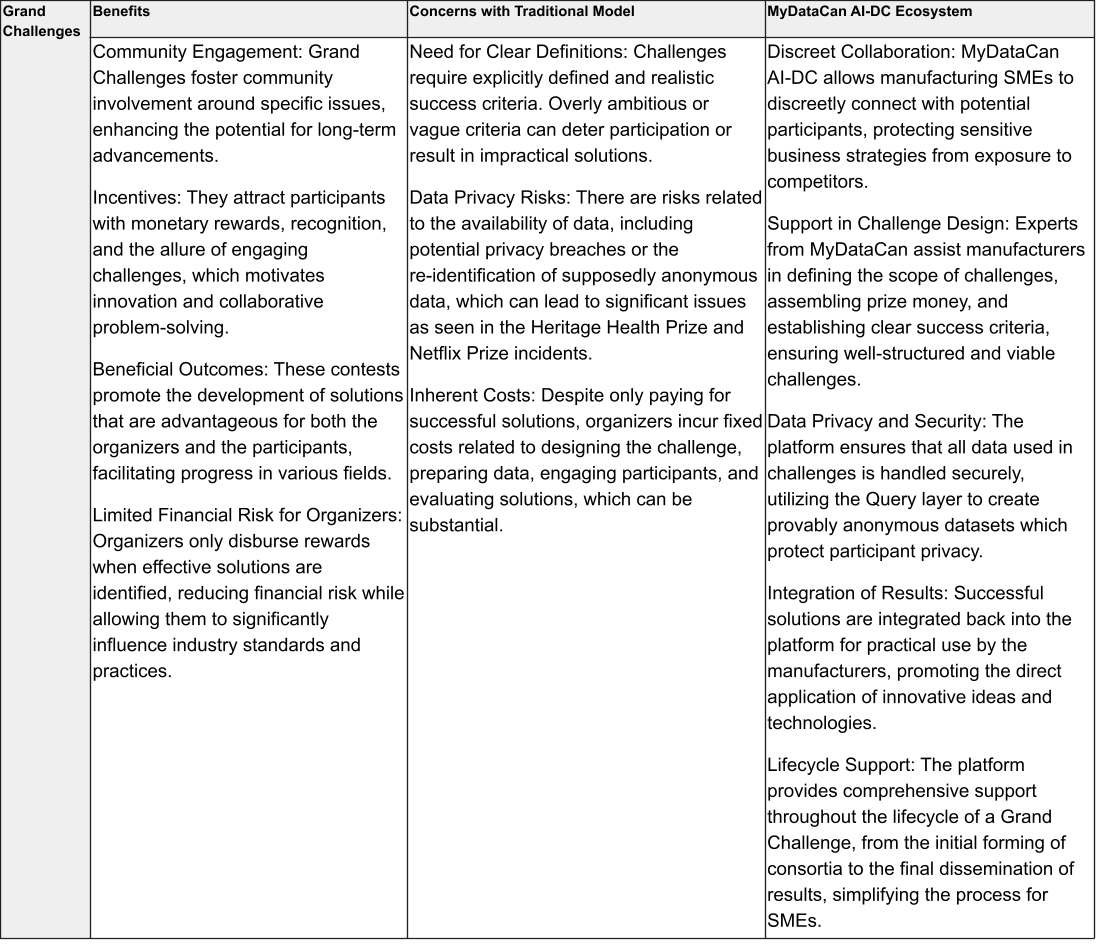

3. Grand Challenges

Grand Challenges are large-scale, incentivized prize competitions that aim to solve complex and significant problems through innovation. These contests mobilize a diverse array of participants, including researchers, entrepreneurs, and developers, to develop solutions in specific fields such as technology, health, and the environment. Grand Challenges provide frameworks for participants to use data and creative approaches to achieve measurable goals, offering substantial rewards, including financial incentives, to spur competition and innovation [45]. The challenges can result in technological advancements, stimulate industry progress, and address societal needs, but they can also bring to light critical issues such as data privacy and the viability of the solutions within practical applications.

In 2006, Netflix launched the “Netflix Prize,” challenging researchers to develop a recommendation algorithm that performed at least 10 percent better than the company’s existing system at predicting whether a user would like a particular film [46] [47]. The research was to be based on aggregated user ratings of other movies, and the rewards for success were various cash prizes, including the US$1 million grand prize [48]. Individuals and teams could register, in their personal capacity or as representatives of companies or academic institutions, and receive access to a dataset containing more than 100 million movie ratings from over 480,000 randomly chosen Netflix subscribers, whose identities had been redacted [48] [49]. The legal terms of the competition allowed participants to retain ownership of their work but granted Netflix a non-exclusive license to the winning solution [48]. The grand prize was ultimately awarded nearly three years after the competition began [50] [51]. Netflix never implemented the final winning algorithm, because doing so would have required too much engineering effort and the company’s business model had changed significantly in the intervening years, including its shift from DVD rental to streaming [52].
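The Netflix Prize scored submissions by root mean squared error (RMSE) on held-out ratings, so “10 percent better” meant a 10 percent reduction in RMSE relative to Netflix's existing system. The sketch below shows that arithmetic; the function names are illustrative and the ratings are made-up values, not Netflix data.

```python
import math


def rmse(predicted: list[float], actual: list[float]) -> float:
    """Root mean squared error between predicted and actual ratings."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


def improvement_pct(baseline_rmse: float, candidate_rmse: float) -> float:
    """Percentage reduction in RMSE relative to the baseline system."""
    return 100.0 * (baseline_rmse - candidate_rmse) / baseline_rmse


actual = [4, 3, 5, 2, 4]                  # hypothetical true ratings
baseline = [3.5, 3.5, 4.0, 3.0, 3.5]      # hypothetical existing system
candidate = [3.8, 3.2, 4.5, 2.5, 3.9]     # hypothetical contestant
gain = improvement_pct(rmse(baseline, actual), rmse(candidate, actual))
print(f"improvement: {gain:.1f}%")
```

Because of floating-point rounding, a check such as `improvement_pct(1.0, 0.9) >= 10` can fail even though the improvement is 10 percent on paper, so a threshold comparison in practice needs a small tolerance.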

As part of the competition, Netflix released what it wrongly believed to be anonymized subscriber viewing lists to help contestants improve content recommendations. Researchers discovered that individuals could be re-identified from their Netflix ratings, which often mirrored reviews they had posted on another site where authorship was known. Despite these findings, which were later published, Netflix announced a second competition in August 2009 with even more detailed data [53]. This prompted the Federal Trade Commission to investigate and publicly express privacy concerns [54]. Additionally, a closeted lesbian mother and others filed a lawsuit claiming that the dataset from the previous contest was insufficiently anonymized and could expose individuals' identities based on their movie rental history [55]. Netflix ultimately canceled the second contest as part of a lawsuit settlement and an agreement to end the Federal Trade Commission investigation [56].
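The linkage idea behind this kind of re-identification can be sketched in a few lines. This is a deliberately simplified, hypothetical illustration (toy data, invented function names, a crude overlap score): it links an “anonymous” rating history to a named public profile when enough movies received the same star rating on nearly the same day. The published research used a more careful similarity measure that, among other things, gives rare movies more weight.

```python
Ratings = dict[str, tuple[int, int]]  # movie -> (stars, day number)


def match_score(anon: Ratings, public: Ratings, day_tolerance: int = 3) -> int:
    """Count movies given the same stars within `day_tolerance` days in both records."""
    score = 0
    for movie, (stars, day) in anon.items():
        if movie in public:
            p_stars, p_day = public[movie]
            if p_stars == stars and abs(p_day - day) <= day_tolerance:
                score += 1
    return score


def link(anon_users: dict[str, Ratings], profiles: dict[str, Ratings],
         min_score: int = 2) -> dict[str, str]:
    """Map anonymous IDs to the best-matching named profile when the match is strong enough."""
    links = {}
    for anon_id, ratings in anon_users.items():
        best_name, best_score = None, 0
        for name, reviews in profiles.items():
            score = match_score(ratings, reviews)
            if score > best_score:
                best_name, best_score = name, score
        if best_score >= min_score:
            links[anon_id] = best_name
    return links


anon_users = {
    "user_17": {"Movie A": (5, 100), "Movie B": (1, 102), "Movie C": (4, 250)},
    "user_42": {"Movie A": (3, 10), "Movie D": (2, 300)},
}
public_profiles = {
    "alice": {"Movie A": (5, 101), "Movie B": (1, 102)},
    "bob": {"Movie D": (2, 500)},
}
print(link(anon_users, public_profiles))  # {'user_17': 'alice'}
```

Removing names does not help when the remaining attributes (here, which movies were rated, how, and when) are distinctive enough to match against a second, identified source.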

Since the Netflix Prize, the organization of data-driven prize competitions has been significantly streamlined by Kaggle, a Google subsidiary. Kaggle hosts machine learning competitions that address a wide range of objectives, including solving commercial issues, fostering professional development, and advancing research [57]. In 2011, the Heritage Provider Network, one of the largest physician-led care organizations in the U.S., initiated the Heritage Health Prize Competition through Kaggle, offering a US$3 million grand prize. The competition aimed to develop an algorithm that could predict the number of days a patient would likely spend in the hospital the following year, with the ultimate goal of reducing unnecessary hospitalizations, a problem estimated to have cost more than US$30 billion in the U.S. in 2006 [58]. Registered participants received access to de-identified data collected during a 48-month period [59]. The data included details on member demographics (such as sex and age at first claim), claims made (such as diagnosis codes and health care provider IDs), lab tests ordered, and prescriptions filled [60]. The competition ran for two years. No prediction algorithm reached the specified accuracy threshold by the end of the contest, and so only a US$500,000 prize was awarded [61].

Not only did the Heritage Prize fail to achieve the desired utility, it also harbored a grave privacy flaw. When the competition data became available [62], co-author Latanya Sweeney and her students from an undergraduate class at Harvard developed strategies to re-identify individuals from the health information included in the dataset. Recognizing a critical learning opportunity, Professor Sweeney contacted the competition's privacy advisor to discuss using this incident to highlight the need for robust scientific methods in data anonymization, which she argued were evidently lacking in the competition’s dataset. She proposed that the sponsors review the accuracy of the re-identified results to confirm her and her students' findings, but was rebuffed by the researcher responsible for de-identifying the data for the competition organizers (K. El Emam, personal correspondence, November 2013).
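The specific re-identification strategies are not described here. As a hedged illustration of why such datasets are vulnerable, the sketch below applies one standard diagnostic: it groups records by quasi-identifiers (attributes such as sex, age band, and provider, which are not names but can be matched against outside information) and flags records that are unique. The smallest group size is the k of k-anonymity, and k = 1 means at least one record stands alone. The field names and records are hypothetical.

```python
from collections import Counter

QUASI_IDENTIFIERS = ("sex", "age_band", "provider_id")  # hypothetical fields


def qi_key(record: dict) -> tuple:
    """The record's combination of quasi-identifier values."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def k_anonymity(records: list[dict]) -> int:
    """Smallest number of records sharing any one quasi-identifier combination."""
    return min(Counter(qi_key(r) for r in records).values())


def unique_records(records: list[dict]) -> list[dict]:
    """Records whose quasi-identifier combination occurs exactly once."""
    counts = Counter(qi_key(r) for r in records)
    return [r for r in records if counts[qi_key(r)] == 1]


records = [
    {"member": "m1", "sex": "F", "age_band": "40-49", "provider_id": "P7"},
    {"member": "m2", "sex": "F", "age_band": "40-49", "provider_id": "P7"},
    {"member": "m3", "sex": "M", "age_band": "80-89", "provider_id": "P2"},
]
print(k_anonymity(records))                            # 1
print([r["member"] for r in unique_records(records)])  # ['m3']
```

A record that is unique on its quasi-identifiers can be re-identified by anyone who knows those few facts about the person, even though no name appears in the data.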

In 2017, Zillow, an American online real estate marketplace, launched the Zillow Prize, a Grand Challenge with a US$1 million grand prize aimed at enhancing the accuracy of its proprietary home valuation tool, the Zestimate. This algorithm calculates home values based on publicly available data, including comparable home sales in the vicinity of the property in question. For the competition, Zillow provided participants with detailed real estate data from three U.S. counties for the year 2016, covering all related transactions within that time frame [63] [64]. The challenge attracted over 3,800 teams from 91 countries, culminating in 2019 with the awarding of the full US$1 million prize. The winning team significantly improved the Zestimate's accuracy, surpassing Zillow’s initial expectations and outperforming the existing benchmark model by approximately 13 percent [65].

During the Zillow Prize challenge, Zillow introduced Zillow Offers, a service that used its technology platform to provide instant cash offers to homeowners eager for a quick and hassle-free sale [66]. This model falls under the umbrella of iBuying, in which companies use algorithms to assess home values, make offers, and, after any renovations, resell the properties [67]. Designed to streamline the traditionally slow home-selling process, iBuying aims to significantly reduce the time and complexity involved.

However, by late 2021, Zillow decided to discontinue its iBuying program. The company encountered significant challenges, primarily the inability of its algorithms to price homes accurately, which resulted in financial losses. Additional factors contributing to the cessation included the inherent unpredictability of home prices and the substantial operational costs associated with maintaining a large inventory of properties. This decision cast doubt on the overall effectiveness of the initial challenge and raised questions about the viability of algorithm-driven real estate pricing in the iBuying business model [66].

Grand Challenges have also been used by the U.S. federal government as a means to use taxpayer funds “to pay only for success [68].” In 2010, the heads of US federal agencies were given the authority to carry out prize contests to “stimulate innovation that has the potential to advance the mission of the respective agency [68].” A number of federal agencies, such as the Department of Defense, the Department of Energy, NASA, and the National Science Foundation have initiated these types of prize competitions using their own agency-specific guidelines [68].

Grand Challenges such as the Netflix Prize, Heritage Health Prize, and Zillow Prize illustrate both the promise and pitfalls of data-driven innovation competitions. While these contests can catalyze technological breakthroughs and attract top-tier talent to solve complex problems, they also carry significant risks—particularly related to data privacy—and often fall short of producing actionable, commercially viable outcomes. For instance, the Heritage Prize concluded without awarding a grand prize, the Netflix Prize’s winning solution was never deployed commercially, and Zillow ultimately shut down its iBuying business due in part to the underperformance of its predictive algorithms. These examples underscore that while Grand Challenges can be powerful tools for stimulating innovation, they require rigorous safeguards for data privacy, thoughtful challenge design, and a clear path for translating insights into sustainable business applications.

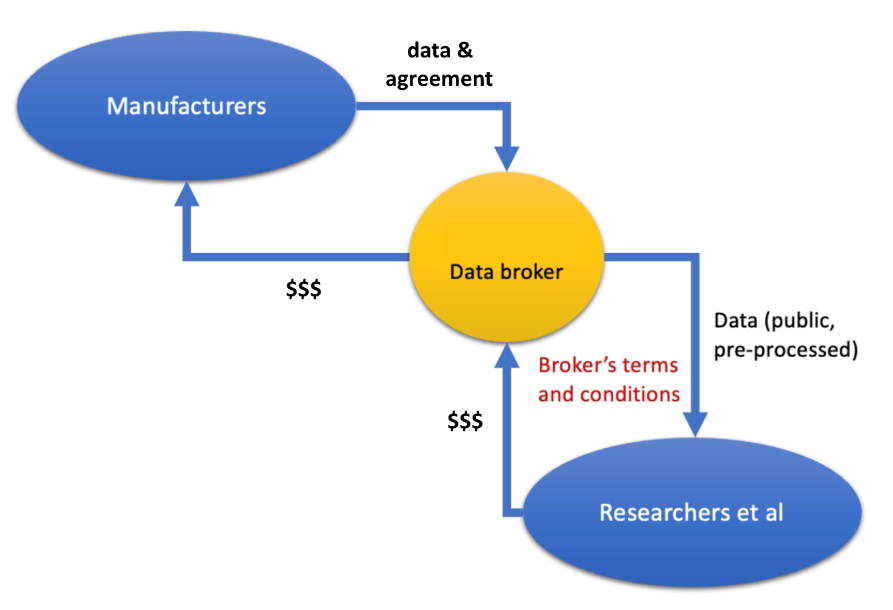

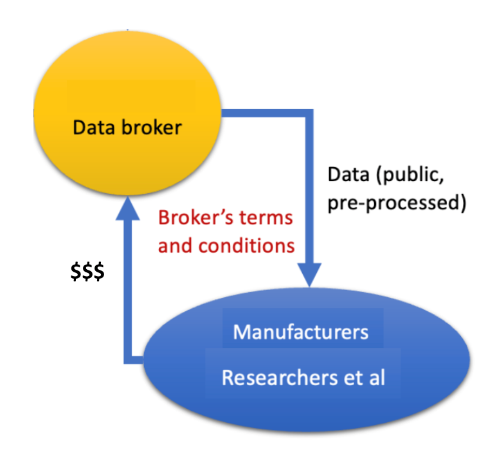

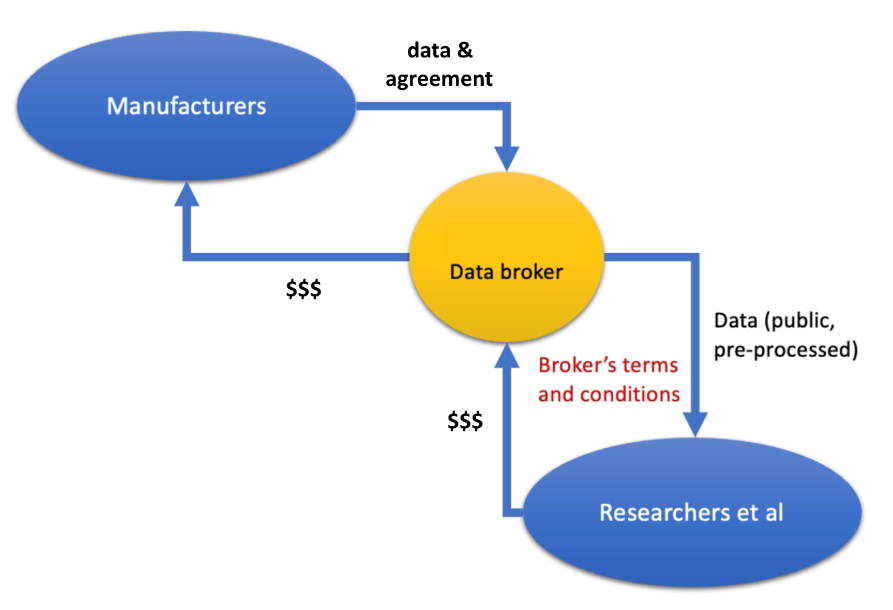

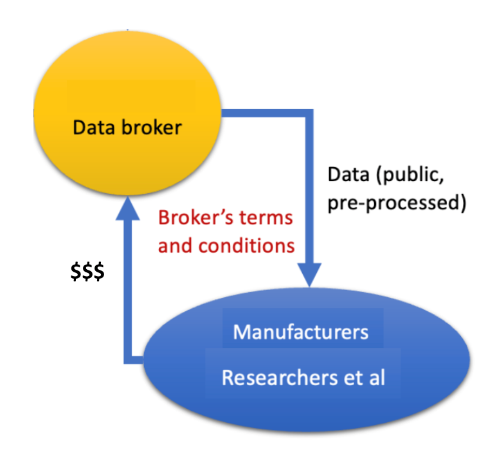

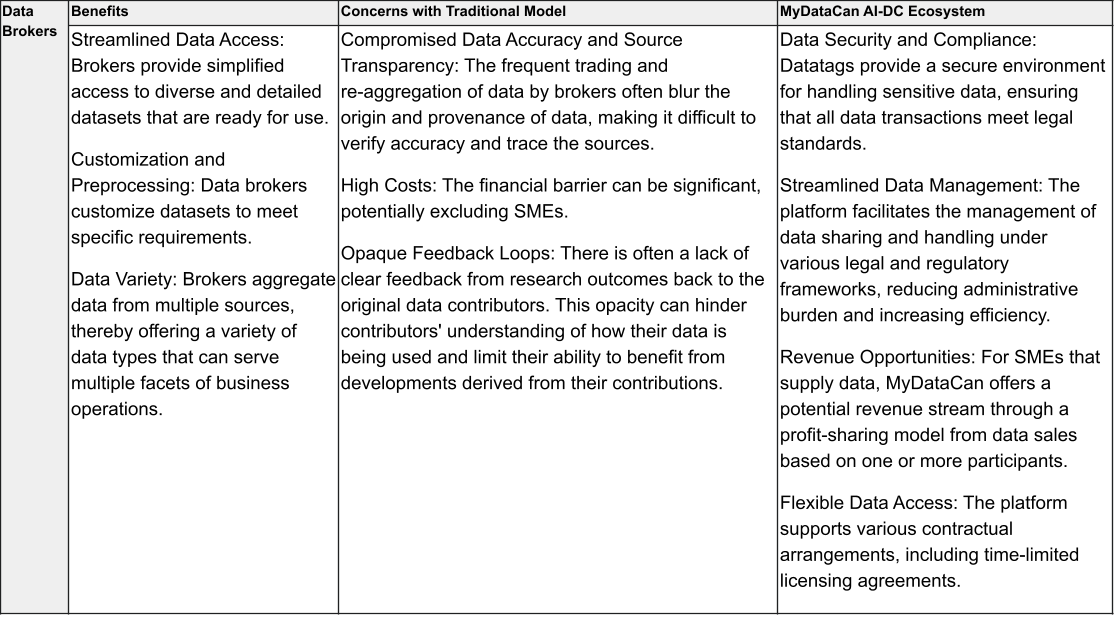

4. Data Brokers

Data brokers are entities that collect, aggregate, and sell data from various sources. They operate on a profit model in which a dataset is a commodity sold to those who can afford it. Their primary role is to compile data about individuals or businesses, which they then organize and resell to other companies, marketers, or interested parties. The information they handle can include personal details, consumer behavior, business intelligence, financial data, and more [69].

Personal data can come from a variety of online and offline sources and might include commercial information (such as purchase histories, warranty registrations, and browsing histories), government information (such as voter rolls and bankruptcy filings), and publicly available information (such as content scraped from social media sites). Once collected by a data broker, datasets are typically cleaned and linked to other related data to create more useful and attractive data products for purchasers. Major tech companies like Google and Meta act as data brokers by monetizing user information alongside their primary services [70]. Surveys have documented hundreds of data brokers for personal information [71] [72].

For business information, data brokers analyze and package a range of data including company financials, industry trends, and consumer behavior. This business intelligence is crucial for companies engaged in market research, competitor analysis, lead generation, and strategic planning. Prominent data brokers specializing in business information include:

- Dun & Bradstreet: Offers comprehensive business reports, credit risk assessments, and detailed company profiles [73].

- Experian: Provides business credit reports, marketing services, and data management solutions [74].

- LexisNexis: Delivers legal, regulatory, and business information, including detailed company profiles and industry reports [75].

- Bloomberg: Known for its in-depth business and financial information, including market data, analysis, and news (e.g., [76]).

- Hoovers (part of Dun & Bradstreet): Supplies detailed company reports and industry analyses [77].

These brokers draw on a mix of public and proprietary sources and aim to keep their information reliable and current in order to support informed business decisions.

By their own accounts, data brokers hold a staggering breadth of data. As of December 2024, Dun & Bradstreet maintained profiles used to assess the creditworthiness of more than 500 million businesses worldwide [78]. The Conway, Arkansas-based data broker Acxiom advertises that its products allow marketers to “reach over 2.5 billion of the world’s marketable consumers” [79], while CoreLogic, based in Irvine, California, purports to have property data covering 99.9% of US land parcels [80].

Overall, data brokers play a crucial role by compiling and analyzing a vast array of personal and business information, which helps companies make well-informed decisions in market research, competitor analysis, lead generation, and strategic planning. They offer insights into credit risk, market dynamics, and financial conditions, and by cleaning and linking disparate data sources, they create comprehensive, readily accessible databases. Their coverage of millions of individuals and businesses worldwide significantly extends the scope and depth of market reach for their customers.

However, the activities of data brokers also have disadvantages. Collecting, aggregating, and selling data raises significant privacy concerns. The volume and variety of sources can compromise the accuracy and relevance of the data, leading to the circulation of outdated or incorrect information. The extensive data held by brokers can also be misused. Finally, the high cost of premium data services can be prohibitive for smaller entities, potentially giving larger corporations an unfair advantage in accessing and using high-quality business intelligence.

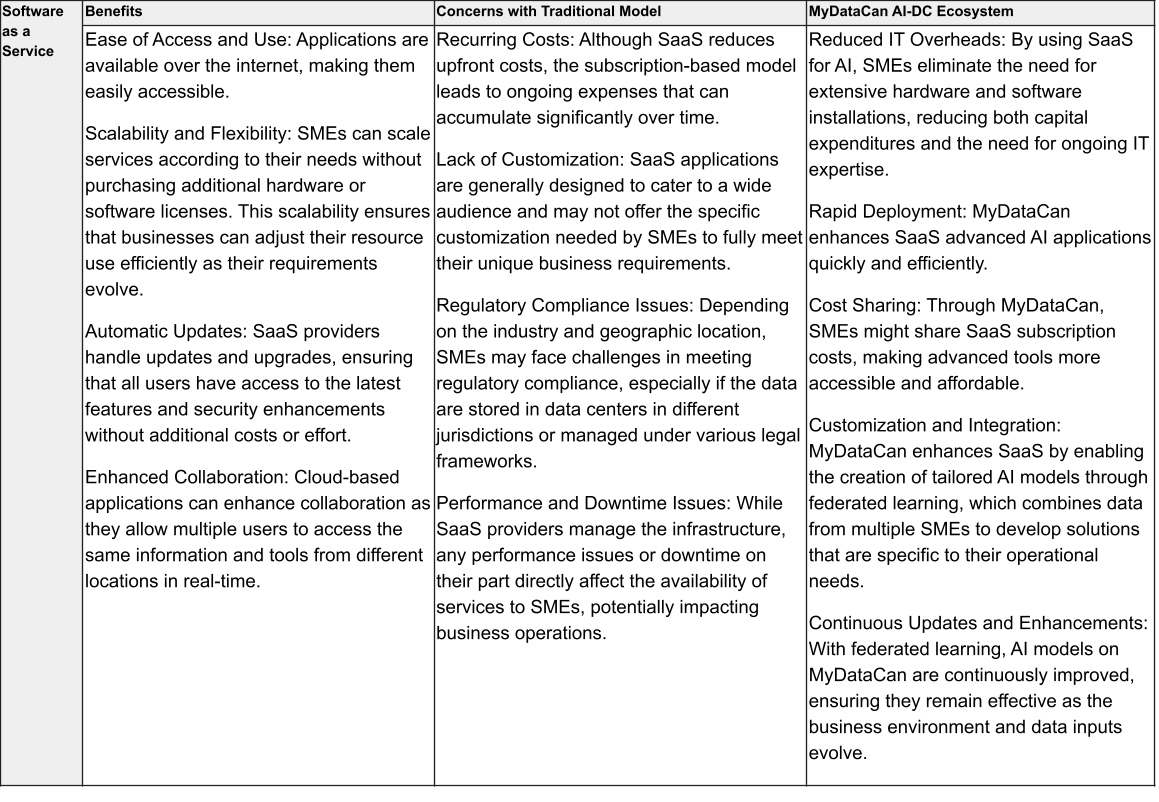

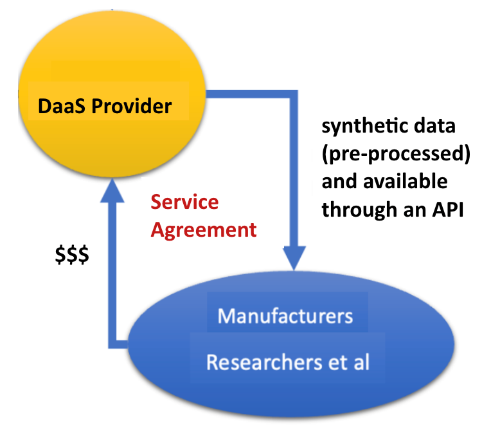

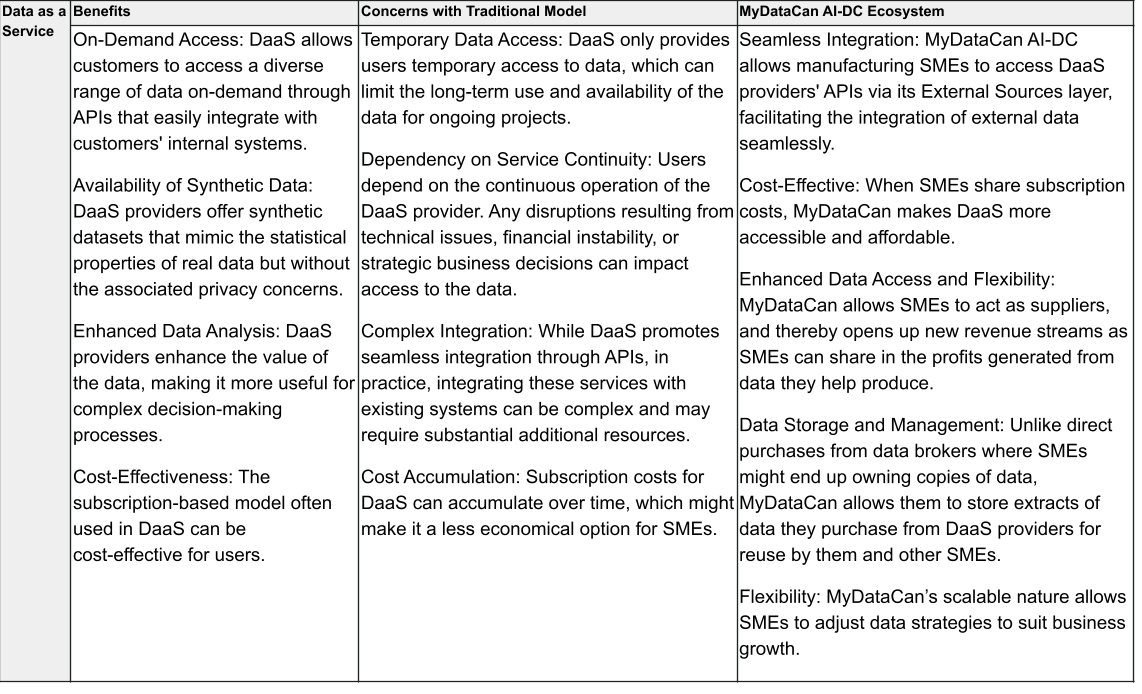

5. Data as a Service

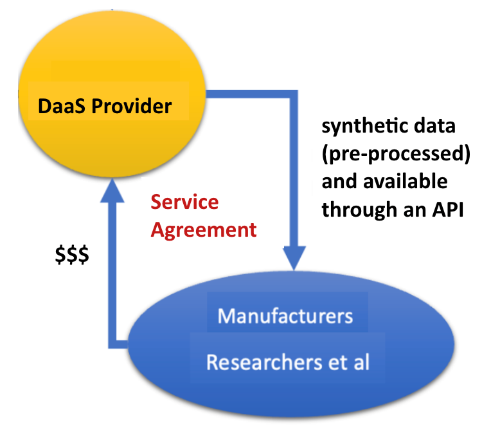

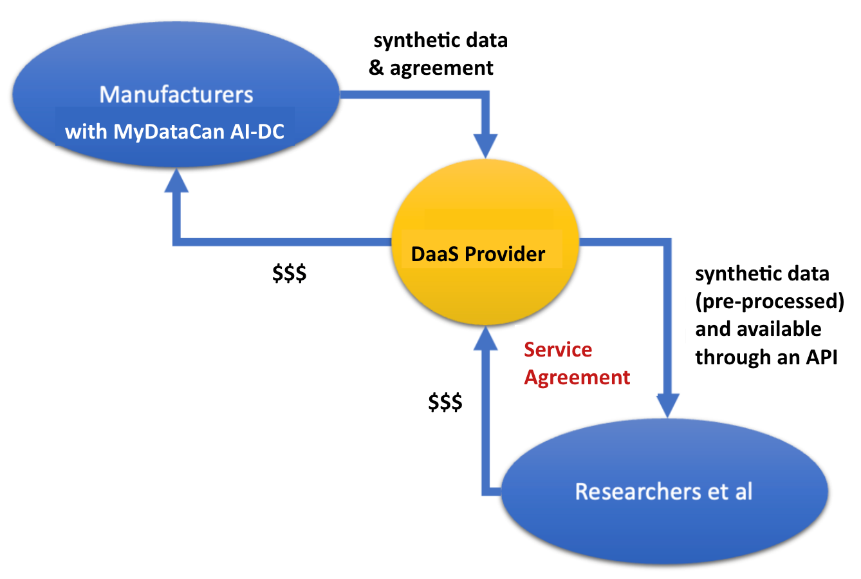

Data as a Service (DaaS) is a cloud-based technology model that provides data over the internet, allowing users to access high-quality information without managing the underlying infrastructure. This model eliminates the need for customers to store data on their own servers or private clouds, saving on costs associated with data management, security, and updates. DaaS offers significant financial flexibility, as companies pay only for the data services they use, when they need them.

DaaS is especially beneficial for data-intensive applications, such as training and validating AI tools, where access to extensive, high-quality datasets is crucial. Users can access data anytime and from anywhere, and services can scale with demand. DaaS providers not only supply data but also help integrate it into customers' existing IT environments, offering tools and APIs that make the data easier to analyze and apply [81] [82].

In contrast to data brokers, who primarily sell data as a product, DaaS providers typically use subscription or usage-based pricing: users pay either for the volume of data they consume or for continuous access to databases. A data broker that traditionally sells data can also offer it through a DaaS model, combining the benefits of both approaches to meet diverse customer needs.
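The trade-off between the two pricing models can be made concrete with a little arithmetic. The sketch below uses hypothetical prices (a flat annual subscription of 12,000 and a usage price of 40 per gigabyte, in arbitrary currency units) that are assumptions for illustration, not figures from any real DaaS provider. It also shows how splitting one subscription among several SMEs lowers each member's cost, assuming the provider's license terms permit such sharing.

```python
# Hypothetical prices for illustration only; not taken from any real DaaS provider.
SUBSCRIPTION_FEE = 12_000.0  # flat annual fee for continuous access
PRICE_PER_GB = 40.0          # usage-based price per gigabyte consumed


def usage_cost(gb_consumed: float, price_per_gb: float = PRICE_PER_GB) -> float:
    """Usage-based pricing: pay only for the volume actually consumed."""
    return gb_consumed * price_per_gb


def break_even_gb(fee: float = SUBSCRIPTION_FEE, price_per_gb: float = PRICE_PER_GB) -> float:
    """Annual volume at which both pricing models cost the same."""
    return fee / price_per_gb


def cheaper_model(gb_consumed: float) -> str:
    """Name the pricing model that costs less for a given annual volume."""
    return "usage" if usage_cost(gb_consumed) < SUBSCRIPTION_FEE else "subscription"


def shared_subscription_cost(n_members: int, fee: float = SUBSCRIPTION_FEE) -> float:
    """Per-member cost when n_members SMEs split one subscription equally."""
    if n_members < 1:
        raise ValueError("need at least one member")
    return fee / n_members


print(break_even_gb())              # 300.0
print(cheaper_model(100))           # usage
print(shared_subscription_cost(4))  # 3000.0
```

Under these assumed prices, an SME consuming less than 300 GB a year would favor usage-based pricing, while four SMEs pooling one subscription would each pay a quarter of the fee.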

Some DaaS providers specialize in using computer simulations or algorithms to generate synthetic data, which can preserve key aggregate statistical properties of real data while greatly reducing privacy risks [83]. Synthetic data can also be tailored to detailed customer specifications. For example, facial recognition algorithms trained on insufficiently diverse datasets tend to be less accurate on the faces of individuals from groups that were underrepresented in the training data. To counter this, DaaS providers can create bespoke synthetic training datasets that are balanced along racial, ethnic, and gender lines [84].
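The balancing idea can be illustrated on a single numeric feature rather than on face images. The sketch below is a deliberately simple parametric generator, assumed for illustration rather than taken from any DaaS provider: it fits a normal distribution to each group's values and draws the same number of synthetic values for every group, so that groups rare in the real data are equally represented. It preserves only each group's mean and spread, not correlations between features, and sampling from fitted distributions does not by itself provide a formal privacy guarantee.

```python
import random
import statistics
from collections import defaultdict


def balanced_synthetic(records, n_per_group, seed=0):
    """Generate a group-balanced synthetic sample from (group, value) pairs.

    For each group, fit a normal distribution (mean and sample standard
    deviation) to the real values, then draw n_per_group synthetic values.
    """
    rng = random.Random(seed)  # seeded so the output is reproducible
    by_group = defaultdict(list)
    for group, value in records:
        by_group[group].append(value)

    synthetic = []
    for group, values in sorted(by_group.items()):
        if len(values) < 2:
            raise ValueError(f"group {group!r} needs at least 2 records to fit")
        mu = statistics.mean(values)
        sigma = statistics.stdev(values)
        synthetic.extend((group, rng.gauss(mu, sigma)) for _ in range(n_per_group))
    return synthetic


# Group "B" is underrepresented in the real data (2 of 6 records).
real = [("A", 1.0), ("A", 2.0), ("A", 3.0), ("A", 4.0), ("B", 10.0), ("B", 12.0)]
synth = balanced_synthetic(real, n_per_group=500)
```

In the output, both groups contribute 500 records each, and each group's synthetic mean stays close to its real mean.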

In summary, Data as a Service (DaaS) provides a cloud-based, cost-effective solution for accessing high-quality data without the infrastructure burden, offering scalable services and financial flexibility ideal for data-intensive tasks like AI training. Additionally, the advent of synthetic data through DaaS addresses privacy concerns and improves algorithm training, offering a potential way to enhance the performance of AI applications across diverse demographic groups. Overall, DaaS represents a transformative approach in the data services industry, promoting innovation and data privacy.

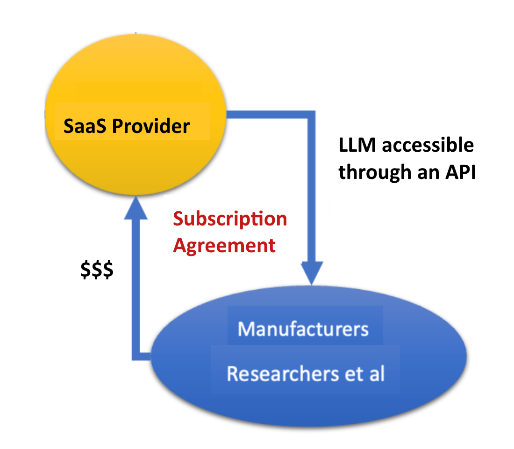

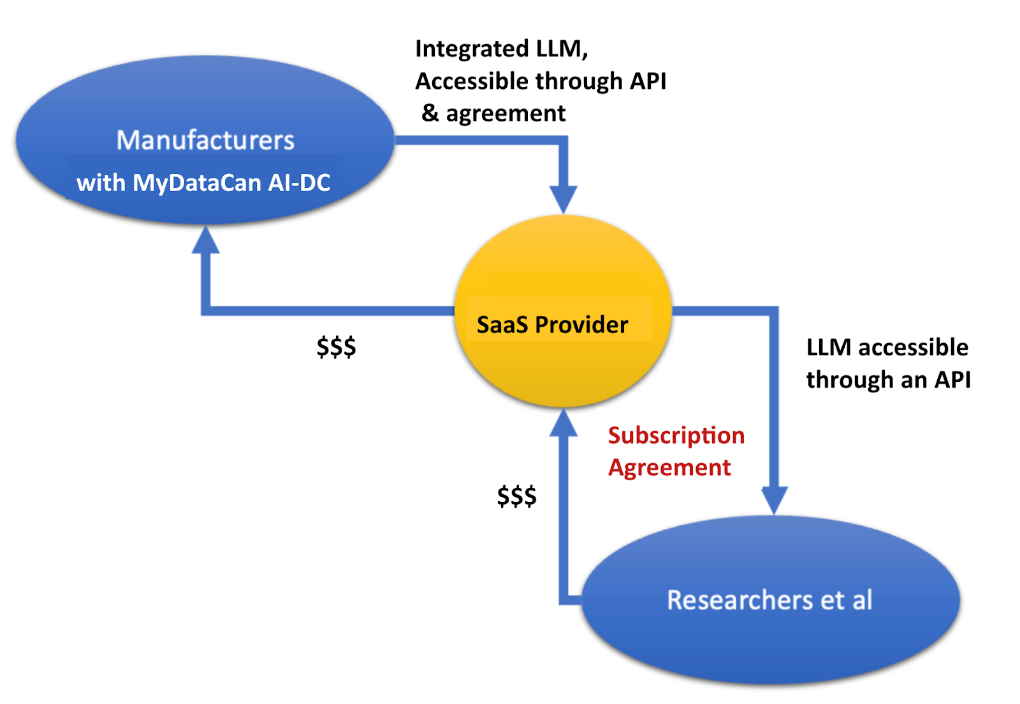

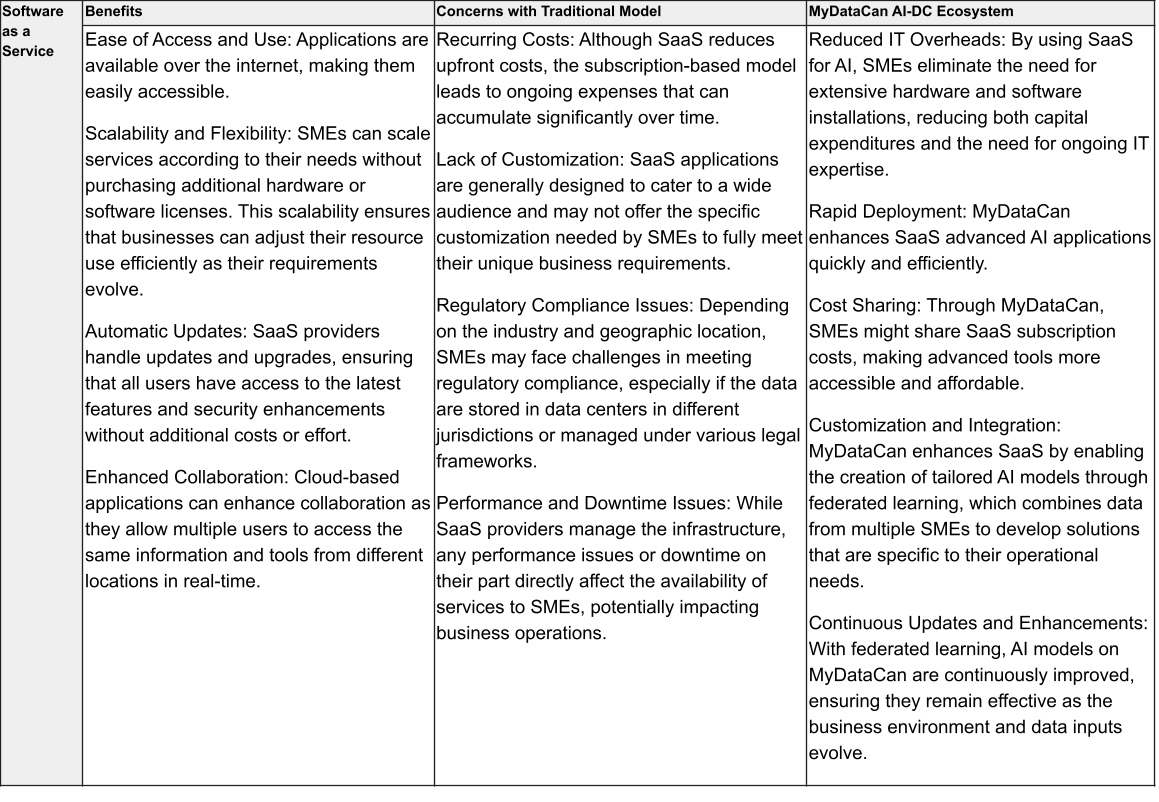

6. Software as a Service

Software as a Service (SaaS) provides access to software applications hosted on remote servers, so users need neither internal hardware nor high upfront costs. Providers manage the application, including maintenance, updates, and security [85]. SaaS is used across many applications, including email, customer relationship management, human resources management, and collaboration tools. It suits businesses that want to reduce IT overhead and scale easily as their software needs change. SaaS providers typically charge a subscription fee based on the number of users and the level of service provided [86].

While both DaaS and SaaS are delivered over the internet and help businesses reduce IT complexity, DaaS provides data that can be integrated into different systems, and SaaS offers ready-to-use software solutions. Both are essential in the modern digital landscape but cater to different organizational needs.

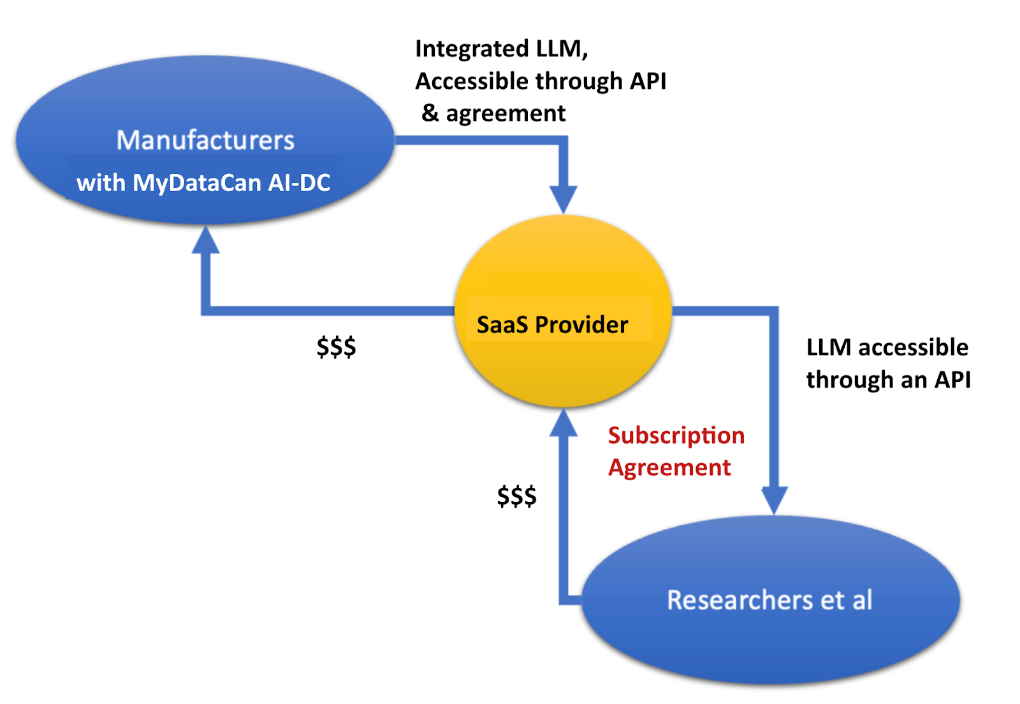

The rise of Large Language Models (LLMs) has transformed Software as a Service (SaaS) offerings into dynamic forms of data commons, as new enterprise tools built on extensive datasets now depend on shared knowledge resources to function effectively. LLMs—advanced AI systems trained on massive corpora of text—use deep learning and neural networks to generate human-like responses, enabling a range of natural language applications including question answering, content summarization, language translation, and conversational interfaces. By predicting text based on contextual cues, LLMs can synthesize and operationalize vast, diverse data inputs. This capability has led to a new class of SaaS products that not only leverage LLMs for utility but also evolve through collective data contributions and feedback loops. Institutions such as the World Economic Forum have highlighted LLMs’ potential to revolutionize sectors like manufacturing by bridging knowledge gaps, organizing large-scale information systems, and enabling more intuitive machine-human collaboration—hallmarks of a modern, functional data commons [87].

Companies with extensive private datasets are increasingly adopting LLMs to maximize their data's utility. These firms use LLMs to process and analyze large volumes of text-based data, extracting valuable insights, automating customer interactions, and enhancing decision-making. By customizing LLMs with proprietary data, companies can develop solutions tuned to their specific needs and strategic objectives, gaining a competitive advantage. For example, a financial institution might use an LLM to interpret legal documents quickly, speeding up responses and compliance reviews.

A manufacturer might use an LLM to enhance its customer service and support operations. By integrating an LLM-powered chatbot hosted on a SaaS platform, the manufacturer could provide real-time, 24/7 support to customers across the globe. Such a chatbot could handle complex customer queries about product features, troubleshooting, maintenance, and warranty information. Drawing on the natural language processing capabilities of an LLM, an ideal chatbot would deliver accurate, context-aware responses, improving response times and reducing the workload on human customer service representatives. Although such an application still needs refinement, it could both raise customer satisfaction through instant assistance and make the manufacturer's support operations more efficient and cost-effective [88].

LLMs might also be deployed to optimize manufacturing operations. On November 8, 2023, SymphonyAI announced an LLM for industrial systems, promising enhancements in manufacturing, reliability, and process optimization [89]. Accessible through the Microsoft Teams AI Library, this LLM utilizes a massive dataset of 1.2 billion tokens derived from over 3 trillion data points, including extensive machine tests and asset details. It is designed to boost operational efficiency and decision-making speeds, providing users with context-aware data and actionable insights for all levels of manufacturing operations. The LLM was trained on large proprietary industrial datasets. Developers can leverage its capabilities via an API or chatbot to create custom applications tailored to specific business needs [90].

For instance, developers can work with industry partners and use the LLM to handle queries about asset performance and reliability independently. It might be integrated into downstream systems to analyze diverse data relevant to the partner’s actual machines, processes, and operations, including events, sensor data, asset details, work orders, and warranty information. Because it is designed to provide machine diagnostics, prescriptive recommendations, and answers to queries on specific fault conditions, test procedures, maintenance practices, manufacturing processes, and industrial standards, SymphonyAI’s Industrial LLM offers both a resource on which to build and a model to replicate for specific manufacturing needs. It may thus set the stage for a new era of industrial applications that make better use of data for critical operations and decision-making.

7. Data as a Public Good

The concept of Data as a Public Good posits that data should be utilized for the greater good of society, a principle particularly resonant in global health and development circles [91] [92]. This approach has predominantly been propelled by public sector institutions committed to solving societal issues through altruistic means [93]. A notable proponent of this philosophy is the United Nations Global Pulse initiative, which has spent over a decade developing policies and infrastructures to support data sharing [94]. Styled as the Secretary-General’s Innovation Lab, it promotes "data philanthropy" and has spearheaded the Global Data Access Initiative (GDAI), later rebranded as Data Insights for Social and Humanitarian Action (DISHA) [94] [95]. Through this initiative, the UN collaborates with partners from the private sector (including McKinsey and Google), nonprofits (like the Jain Family Institute and the Patrick J. McGovern Foundation), and international public agencies (such as the World Food Program and the UN Development Programme) to harness proprietary data—from satellite imagery to mobile data—for significant social impact in alignment with the UN Sustainable Development Goals [95].

In 2013, UN Global Pulse partnered with mobile phone operator Orange, as well as entities such as universities and the World Economic Forum, to organize the first Data for Development Challenge [94] [96]. For this initiative, Orange provided 2.5 billion anonymized phone call and SMS records from 5 million customers in Côte d'Ivoire, which researchers from around the world used to explore various international development issues [97]. Access was restricted to researchers from recognized institutions whose proposals met predefined criteria and who agreed to specific terms and conditions. Since then, UN Global Pulse has partnered with companies like BBVA bank, Schneider Electric, and Waze to make their data accessible for research aimed at the UN Sustainable Development Goals [94].

Another use of Data as a Public Good arises when companies are asked to contribute data relevant to evidence-based policymaking, such as workforce statistics or energy consumption details [98]. Such data can be shared via DaaS or SaaS platforms, with synthetic data or aggregate statistics used to protect confidentiality and individual privacy while still contributing valuable insights for public benefit.
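One common way to release aggregate statistics is to report per-category counts and totals while suppressing any category with too few contributors, since a cell summarizing one or two companies can reveal their individual values. The sketch below assumes a suppression threshold of five contributors, which is an illustrative choice; real disclosure-control policies vary, and suppression alone does not protect against every attack (for example, differencing across overlapping releases).

```python
from collections import defaultdict

MIN_CELL_SIZE = 5  # assumed threshold; actual disclosure-control policies vary


def aggregate_with_suppression(rows, min_cell=MIN_CELL_SIZE):
    """Summarize (category, value) rows as per-category count and total.

    Categories with fewer than min_cell contributing rows are reported as
    None (suppressed) so that no small group's values can be read off.
    """
    cells = defaultdict(list)
    for category, value in rows:
        cells[category].append(value)

    report = {}
    for category, values in cells.items():
        if len(values) < min_cell:
            report[category] = None  # suppressed: too few contributors
        else:
            report[category] = {"count": len(values), "total": sum(values)}
    return report


# Hypothetical workforce headcounts reported by individual companies, by region.
rows = [("north", 40), ("north", 55), ("north", 12), ("north", 80), ("north", 23),
        ("south", 300), ("south", 15)]
print(aggregate_with_suppression(rows))
# {'north': {'count': 5, 'total': 210}, 'south': None}
```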

In summary, this section categorized and explored the varied forms of data commons, defining them as collaborative efforts where stakeholders with diverse goals share and analyze data, regardless of the initiative's designation. We identified seven distinct types: Open Data, Data Consortia, Grand Challenges, Data Brokers, Data as a Service, Software as a Service, and Data as a Public Good. Each type of data commons offers unique operational characteristics, benefits, and challenges.

SMEs Benefit from All Seven Data Commons

While not every SME may utilize all seven types of data commons, having access to a variety of them is essential for remaining competitive and addressing key challenges through data, innovation, and AI. An AI-DC needs to provide all seven types of data commons.

Open Data enhances accessibility and fosters innovation, whereas Data Consortia promote resource pooling and industry standardization, although they may pose barriers to smaller entities. Grand Challenges can catalyze breakthrough innovations but come with privacy risks and uncertain outcomes. Data Brokers and Data as a Service deliver crucial business intelligence but must carefully manage data privacy and accuracy issues. Software as a Service provides access to applications built on shared data resources, improving operational efficiency. Data as a Public Good supports data sharing for societal benefit but faces significant privacy challenges. Together, these data commons types reveal both the transformative potential and the complex challenges of managing and using shared data across sectors.

Figure 3 presents a comparison of the seven types of data commons (Open Data, Data Consortia, Grand Challenges, Data Brokers, Data as a Service, Software as a Service, and Data as a Public Good), focusing on their applicability to the needs of SMEs in terms of privacy, funding, innovation potential, and strategic advantages. Open Data, Grand Challenges, and Data as a Public Good are ways that SMEs can provide data to others, but they do so with no promise of useful innovation in exchange, because the focus is not on a problem specific to the data providers. A Data Consortium, Data Brokers, Data as a Service, and Software as a Service offer ways to share data to address specific problems of concern, but the range of know-how may be limited to a few experts. Given that SMEs often have limited investment capacity for IT resources and expertise, pooling these through Data as a Service and Software as a Service proves beneficial. Regardless of the data commons type, ensuring privacy and confidentiality in data sharing is a critical requirement for all SMEs.

Figure 3. Feature comparison of the seven types of data commons based on participation by SMEs.


Methods

The MyDataCan infrastructure

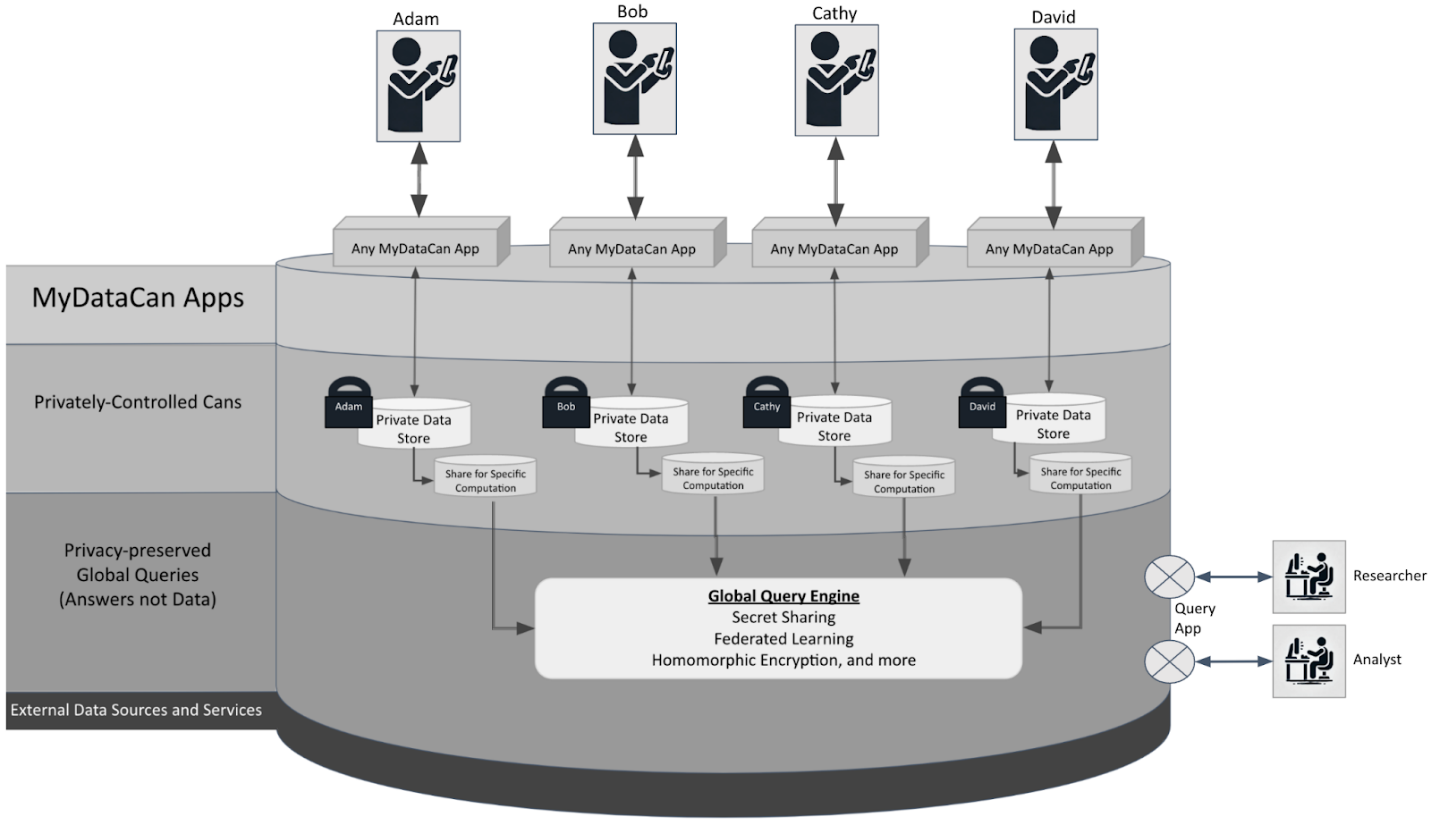

MyDataCan is a privacy-preserving digital infrastructure designed to empower individuals by giving them control over their personal data [18]. It allows users to securely store their data and selectively share it with trusted parties for specific purposes, leveraging cutting-edge encryption and privacy-preserving technologies to ensure data confidentiality and security. Created by co-author Latanya Sweeney in 2008, developed for personal health records in 2012 [99], and made professionally operational for privacy-preserving contact tracing during the 2020 pandemic [100], MyDataCan has evolved to support a wide range of applications, from healthcare to education, by enabling researchers and organizations to access vital data under strict user-controlled protocols in a secure cloud environment. This platform facilitates responsible data use, enhancing individual privacy and promoting transparency and collaboration in data-driven applications.

MyDataCan empowers individuals to take full control over their data. Developers can create apps that operate on the MyDataCan platform, where users engage with these apps as they would any other, yet with a significant difference: Datasets shared with these apps are securely stored in a "can" controlled exclusively by the user. This allows individuals to manage how their datasets are utilized across different applications. Over time, we anticipate that this user-controlled data will become more accurate and comprehensive than information gathered by other means, such as through data brokers. Consequently, entities seeking information about an individual will need to obtain their consent to access specific data from these private storage "cans." Individuals can also grant consent to share subsets of their data to contribute to privacy-preserving global and aggregate queries and datasets. MyDataCan leverages the latest advancements in data privacy and security to ensure robust access control and data management within its platform.

For manufacturing SMEs, this infrastructure provides a hypothetical environment in which companies could control their data within private, secure data silos on MyDataCan. These datasets are encrypted so that they remain inaccessible even to the platform provider. SMEs can choose to make their data accessible for broader analysis on an opt-in, case-by-case basis, creating more opportunities for insight. For instance, they might participate in secure multiparty computations across data from multiple manufacturers or merge their data with others’ on MyDataCan to create aggregated, privacy-protected datasets. These collaborative analyses are performed without transferring raw data, using privacy-preserving technologies such as secure multiparty computation protocols, zero-knowledge proofs, homomorphic encryption, and federated learning. Additionally, the virtual machines used in these processes are created automatically, perform their analysis, report their results, and then destroy themselves to further safeguard confidentiality.
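Secure multiparty computation covers many protocols; one of the simplest building blocks is additive secret sharing, which lets several manufacturers learn the sum of their private values (for example, total monthly machine downtime) without any party seeing another's value. The sketch below simulates all parties in one process and assumes semi-honest participants and a public prime modulus chosen for illustration; it is not MyDataCan's implementation, which is not specified here.

```python
import secrets

MODULUS = 2**61 - 1  # public prime; private values and their total must be below it


def share(value, n_parties, modulus=MODULUS):
    """Split value into n_parties random shares that sum to value (mod modulus)."""
    shares = [secrets.randbelow(modulus) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % modulus)
    return shares


def secure_sum(private_values, modulus=MODULUS):
    """Simulate computing a sum with additive secret sharing.

    Manufacturer i splits its value into one share per party and sends
    share j to party j. Any single share, on its own, is uniformly random and
    reveals nothing about the value. Each party publishes only the sum of the
    shares it received, and adding those partial sums yields the total.
    """
    n = len(private_values)
    # all_shares[i][j] is the share manufacturer i sends to party j.
    all_shares = [share(v, n, modulus) for v in private_values]
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % modulus for j in range(n)]
    return sum(partial_sums) % modulus


# Hypothetical monthly downtime (minutes) at three manufacturers.
print(secure_sum([120, 75, 310]))  # 505
```

Only the total (505) becomes known; each party's view consists of random-looking shares and the published partial sums.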

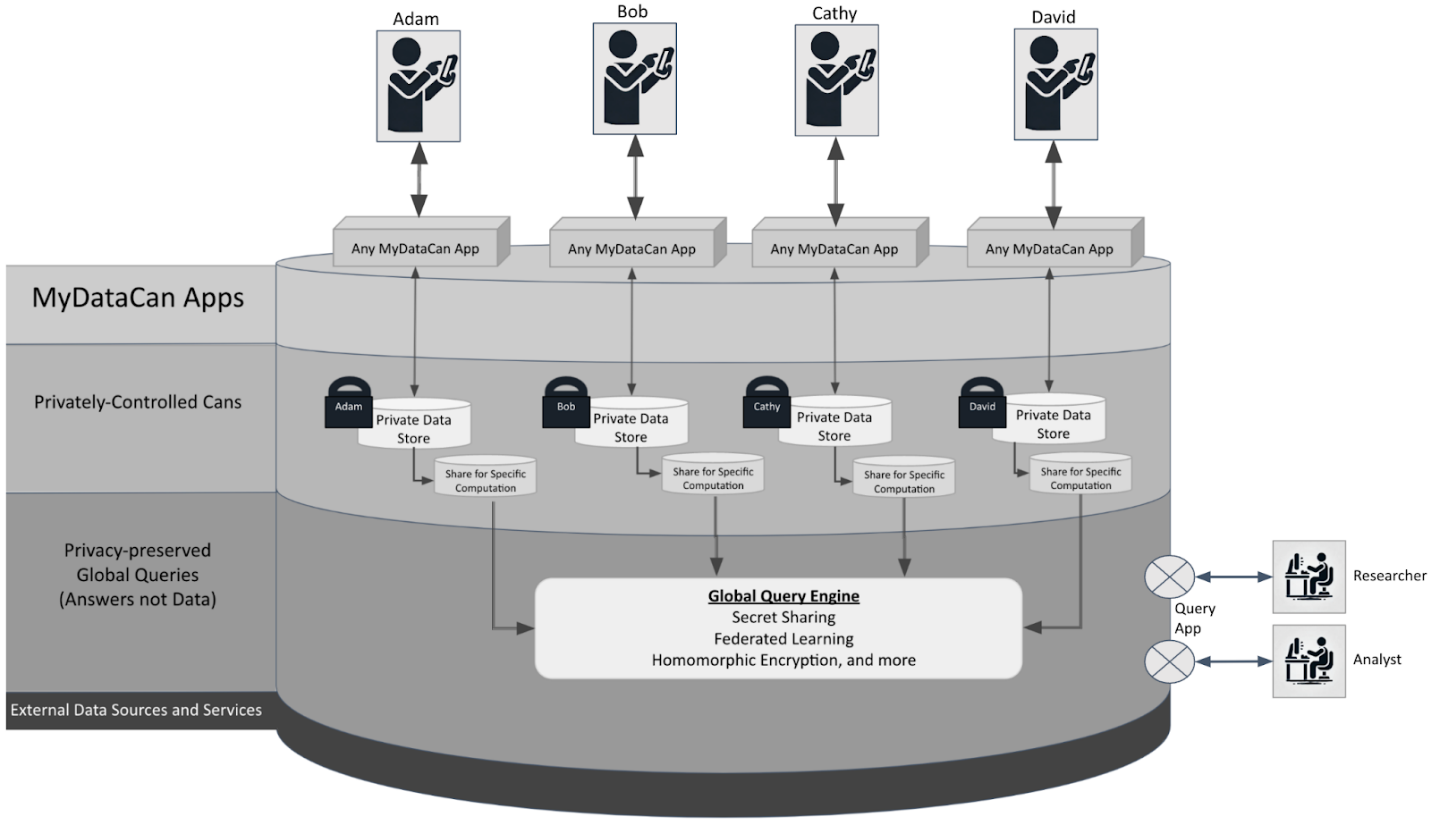

Figure 4 illustrates the functional architecture of MyDataCan. Users interact with apps and web services as usual, with the distinction that these services, once tethered to MyDataCan, store a copy of each user's personal data in the user’s private storage ("can") on the platform, as shown in the Users, MyDataCan, and Privately Controlled Cans layers in Figure 4. Each dataset remains under the user's control, encrypted so that even MyDataCan providers cannot gain access, as indicated by the personal locks in Figure 4.

Users have the flexibility to authorize the sharing of their data for specific purposes, employing a permission system that details the privacy level [101], intended use, and other handling requirements. This is depicted in the Share for Specific Computation buckets for each user in Figure 4. Approved researchers and analysts can access responses to queries or anonymized data consistent with the permissions granted.
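A permission check of this kind can be sketched as a default-deny lookup. In the sketch below, the record fields (dataset, grantee, purpose, and maximum output level) and the three output levels are hypothetical simplifications of the permission system described above [101], not MyDataCan's actual schema.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical output levels, ordered from least to most revealing.
OUTPUT_LEVELS = {"aggregate": 0, "anonymized": 1, "identified": 2}


@dataclass(frozen=True)
class Permission:
    """An authorization a data owner attaches to one dataset (hypothetical schema)."""
    dataset: str
    grantee: str
    purpose: str
    max_level: str  # most revealing output the owner allows for this purpose


@dataclass(frozen=True)
class Request:
    """An access request from a researcher or analyst (hypothetical schema)."""
    dataset: str
    requester: str
    purpose: str
    level: str


def is_allowed(request: Request, permissions: Sequence[Permission]) -> bool:
    """Grant access only if a permission matches the dataset, requester, and
    purpose, and the requested output is no more revealing than allowed."""
    for p in permissions:
        if (p.dataset == request.dataset
                and p.grantee == request.requester
                and p.purpose == request.purpose
                and OUTPUT_LEVELS[request.level] <= OUTPUT_LEVELS[p.max_level]):
            return True
    return False  # default deny: no matching permission


perms = [Permission("machine_logs", "lab_1", "downtime_study", "aggregate")]
print(is_allowed(Request("machine_logs", "lab_1", "downtime_study", "aggregate"), perms))   # True
print(is_allowed(Request("machine_logs", "lab_1", "downtime_study", "anonymized"), perms))  # False
```

Denying by default means that any purpose, requester, or output level the owner has not explicitly approved is refused.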

Figure 4. Functional diagram of the MyDataCan infrastructure showing layers from top to bottom: individual users, MyDataCan apps, privately controlled cans, privacy-preserved global queries, and external data sources and services. At right, researchers and analysts query privacy-preserving aggregations of the information individuals have agreed to share.

MyDataCan’s global queries layer leverages decades of advances in data privacy, cryptography, and computer security to combine and share datasets with scientific guarantees of privacy and confidentiality. This includes:

- using statistical and data redaction methods to render datasets provably anonymous (e.g., [102] [103] and see example in Figure 6);

- employing multiparty computation protocols that allow secure computers to compute aggregate values without disclosing individual data points (e.g., [104] [105] [106] and see example in Figure 8);

- utilizing federated learning to enable decentralized computers to collaboratively learn a shared prediction model while keeping all training data local (e.g., [107] and see example in Figure 16);

- implementing homomorphic encryption to perform computations on encrypted data with results matching those from unencrypted data without revealing the original data (e.g., [108] [109] and see examples in the upcoming Results Section);

- conducting surveys, with anonymous but verifiable contributions or explicitly identified contributions (see example in Figure 1); and,

- employing data tags to specify and enforce security and access requirements for sensitive data across the technological infrastructure (e.g., [101] and see example in Figure 12).
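Of the techniques listed above, federated learning is perhaps the easiest to illustrate in a few lines. The sketch below follows the federated averaging idea: each manufacturer fits a simple linear model, y ≈ w*x + b, on its own data with a few steps of gradient descent, and a coordinating server averages only the returned parameters, weighted by each participant's number of records. The model, learning rate, and round counts are assumptions chosen for illustration; production federated learning typically adds secure aggregation or differential privacy, because model updates themselves can leak information.

```python
def local_update(weights, data, lr=0.01, epochs=20):
    """Gradient descent on one participant's (x, y) data for y ~ w*x + b.

    Runs where the data lives; only the updated (w, b) is returned.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_averaging(client_datasets, rounds=100, lr=0.01, epochs=20):
    """Each round, every participant trains locally from the current global
    model; the server then takes a data-size-weighted average of the results."""
    global_w, global_b = 0.0, 0.0
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        updates = [local_update((global_w, global_b), d, lr, epochs) for d in client_datasets]
        global_w = sum(len(d) * w for d, (w, _) in zip(client_datasets, updates)) / total
        global_b = sum(len(d) * b for d, (_, b) in zip(client_datasets, updates)) / total
    return global_w, global_b


# Hypothetical sensor readings at three manufacturers, all following y = 2x + 1.
clients = [
    [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0)],
    [(x, 2 * x + 1) for x in (3.0, 4.0)],
    [(x, 2 * x + 1) for x in (0.5, 1.5, 2.5, 3.5)],
]
w, b = federated_averaging(clients)
print(round(w, 2), round(b, 2))  # expected to be close to 2.0 and 1.0
```

The server never sees any (x, y) pair; it only receives and averages each participant's (w, b).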

Additionally, external data and services can be imported into MyDataCan or made available through APIs, as seen in the bottom layer of Figure 4, to enhance the scope of global queries and analyses. The Global Query Engine in the MyDataCan framework supports a range of privacy-preserving operations, including anonymous matching, aggregate computations, and synthetic data generation, all conducted with rigorous privacy and confidentiality protections.
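How the Global Query Engine performs anonymous matching is not detailed here. One simple, widely used approach, sketched below as an assumption rather than as MyDataCan's method, is keyed hashing: participants share a secret key, replace each identifier with an HMAC-SHA256 token, and submit only tokens, so the matching service can count overlaps without seeing names. This is weaker than a full private set intersection protocol, since anyone holding the key can test guesses against the tokens.

```python
import hashlib
import hmac


def blind(identifiers, key: bytes):
    """Replace each identifier with a keyed HMAC-SHA256 token.

    Identifiers are normalized (trimmed, lowercased) first so that trivial
    formatting differences do not prevent a match.
    """
    return {
        hmac.new(key, ident.strip().lower().encode("utf-8"), hashlib.sha256).hexdigest()
        for ident in identifiers
    }


def overlap_count(tokens_a, tokens_b):
    """Run by the matching service, which sees only tokens, never identifiers."""
    return len(tokens_a & tokens_b)


# Hypothetical key held by the two participants, not by the matching service.
key = b"example-shared-secret"
suppliers_a = blind(["Acme Castings", "Delta Tooling", "Omega Steel"], key)
suppliers_b = blind(["omega steel ", "Zeta Plastics", "ACME Castings"], key)
print(overlap_count(suppliers_a, suppliers_b))  # 2
```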

MyDataCan uses a datatags system for managing sensitive data. Specific security and access requirements are assigned to each dataset using a set of well-defined color-named tags [101]. This system dramatically simplifies the navigation of thousands of data-sharing regulations, transforming them into a manageable number of clear categories. Each datatag delineates the handling, security features, and access protocols required for the data it labels, ensuring that data can be shared appropriately based on its sensitivity. MyDataCan supports a range of security measures from basic to high-security requirements for both storage and transmission to safeguard the data against unauthorized access. By facilitating a structured way to meet legal and ethical standards, MyDataCan enhances the reliability and safety of data sharing, making it easier for businesses to comply with regulatory requirements while maintaining the confidentiality and integrity of the data they handle.
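The core idea of datatags, mapping each tag to a fixed set of handling requirements and checking a proposed setup against them, can be sketched as a lookup table. The three color names and the requirements attached to them below are illustrative assumptions, not the actual tag definitions, which are given in [101].

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Handling:
    """Protections a storage or transmission setup provides (or a tag requires)."""
    encrypt_in_transit: bool
    encrypt_at_rest: bool
    signed_agreement: bool  # data use agreement signed before access
    two_factor_auth: bool


# Illustrative tag requirements only; see [101] for the real datatag definitions.
TAG_REQUIREMENTS = {
    "blue": Handling(False, False, False, False),
    "yellow": Handling(True, False, True, False),
    "red": Handling(True, True, True, True),
}


def complies(tag: str, setup: Handling) -> bool:
    """A setup complies if it provides every protection the tag requires."""
    required = TAG_REQUIREMENTS[tag]
    return all(
        getattr(setup, f.name) or not getattr(required, f.name)
        for f in fields(Handling)
    )


basic_server = Handling(encrypt_in_transit=True, encrypt_at_rest=False,
                        signed_agreement=True, two_factor_auth=False)
print(complies("yellow", basic_server))  # True
print(complies("red", basic_server))     # False
```

Reducing many regulations to a few tags means the compliance check is a comparison against one requirement record rather than a case-by-case legal review.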

Study Design

In this paper we customize the MyDataCan platform to function within an ecosystem that supports the seven types of data commons, creating an AI-DC environment tailored for SMEs. This configuration enables effective data sharing and innovation, empowering SMEs to address their unique challenges with customized, data-driven solutions. By enhancing the platform’s capabilities, we promote industry-wide collaboration with a commitment to robust scientific guarantees of privacy and confidentiality. The Results section of this paper examines the specific features and operational aspects of each data commons type within a MyDataCan-powered AI-DC implementation.

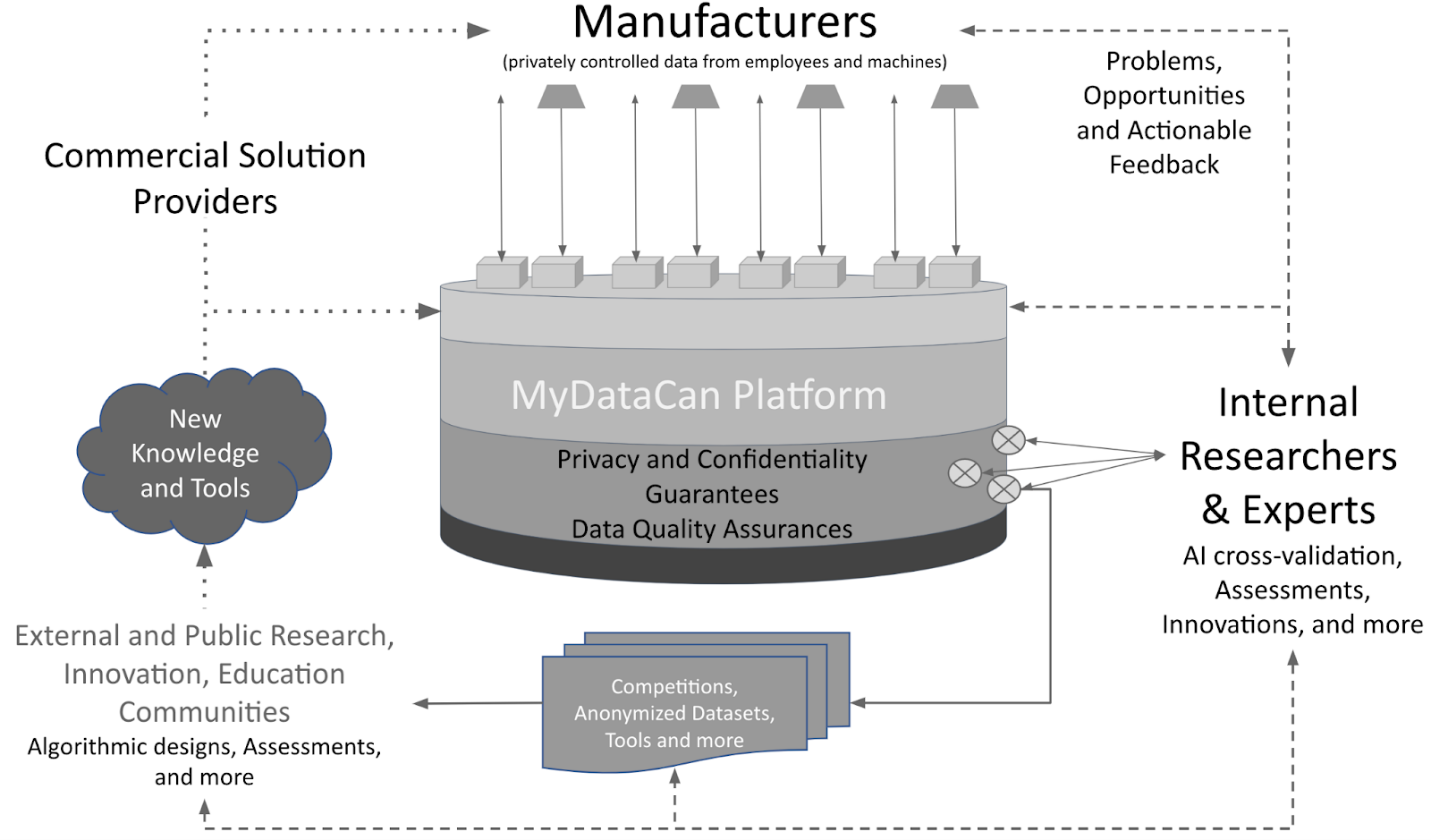

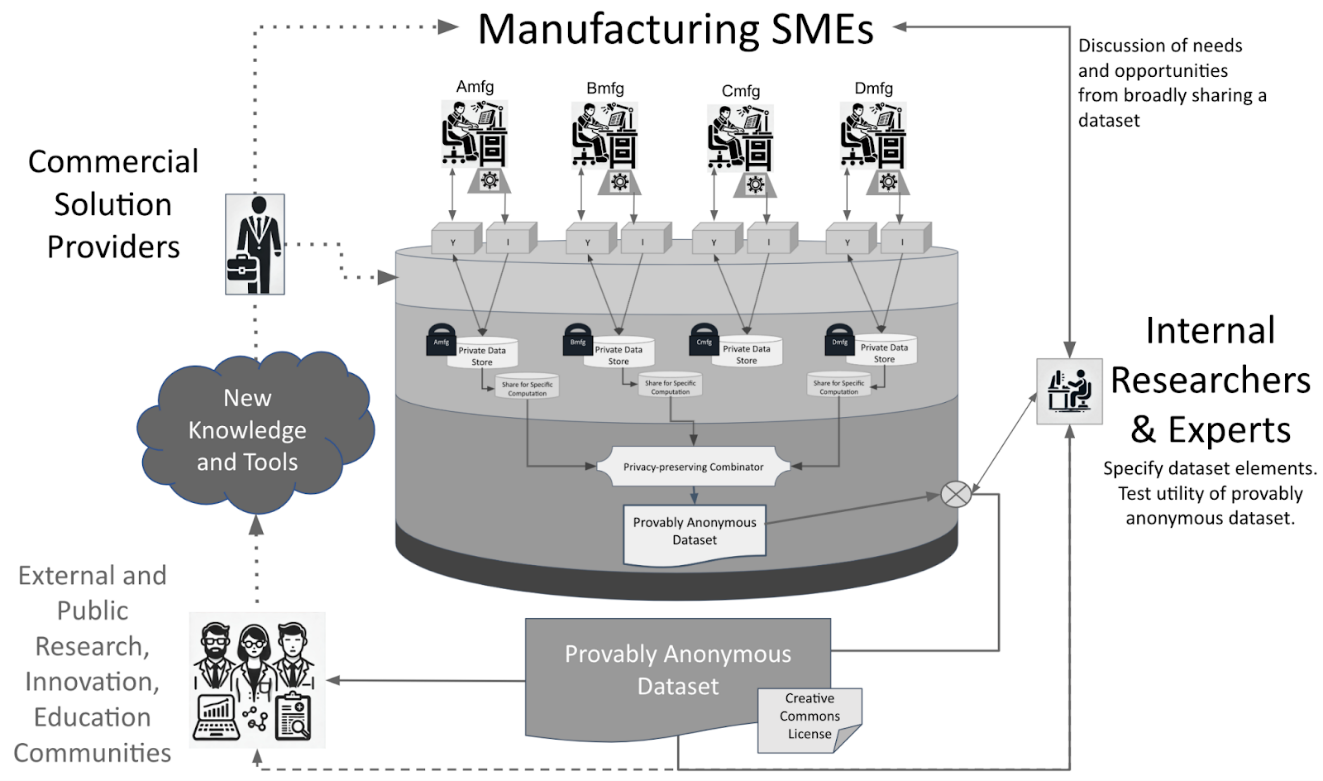

Figure 1 introduced an AI-DC ecosystem. Figure 5 illustrates the implementation of an AI-DC Ecosystem utilizing MyDataCan as the central infrastructure for securely storing, analyzing, and sharing data under stringent privacy and confidentiality guarantees. This infrastructure will be generally referred to as the “MyDataCan AI-DC.” This paper will focus on small and medium-sized manufacturers as a case study, although the principles and applications discussed are broadly relevant to other SMEs as well.

Manufacturers input data through apps linked to MyDataCan, and machines and process tools directly upload data to individual manufacturers’ private silos. As shown in Figure 1, manufacturers face specific challenges and utilize their data along with shared resources to seek solutions from collaborating researchers and experts. Figure 5 highlights these communication flows between manufacturers and researchers, facilitated by MyDataCan’s query layer, which ensures appropriate access and privacy controls for data shared with approved researchers and analysts. In return, these researchers and analysts provide manufacturers with actionable insights and may also develop new tools, which become available as apps on MyDataCan for manufacturers’ use.

Manufacturers have the option to pool their data to create joint datasets for competitions or broader sharing with the research and innovation communities—including educational institutions, startups, and think tanks. This is depicted in Figure 5 through the use of the MyDataCan query layer, which generates privacy-preserving datasets from consenting manufacturers. The outcomes are then made available more widely, fostering further research and development.

Figure 5. Depiction of an AI Data Community (AI-DC) ecosystem powered by MyDataCan, referred to as a MyDataCan AI-DC. The ecosystem includes manufacturers, internal researchers and experts, and commercial solution providers and interacts with communities of research, innovation and education.

Figure 5 not only details the interactions within the AI-DC ecosystem but also emphasizes the continuous cycle of feedback and innovation. New tools and knowledge are disseminated to manufacturers with necessary support and training from commercial solution providers. New applications on the MyDataCan platform equip manufacturers to independently manage and analyze their data within the secure environment of MyDataCan. This figure captures the essence of the dynamic AI-DC ecosystem as initially described in Figure 1, showcasing the practical application and collaborative nature of this model.

Not depicted in Figure 5, but integral to the AI-DC ecosystem facilitated by MyDataCan, is the capability to integrate external datasets and services into MyDataCan's query engine. This functionality lets manufacturers use additional datasets, as well as APIs to external datasets and services, and combine these resources with their privately held data within MyDataCan's secure environment, keeping their private information confidential.

Results

The feasibility and benefits of implementing each of the seven data commons models (Open Data, Data Consortium, Grand Challenges, Data Brokers, Data as a Service, Software as a Service, and Data as a Public Good) using a MyDataCan AI-DC are examined in the following subsections. Each subsection compares a data commons model with its implementation in a MyDataCan AI-DC and identifies any additional advantages provided or new concerns introduced.

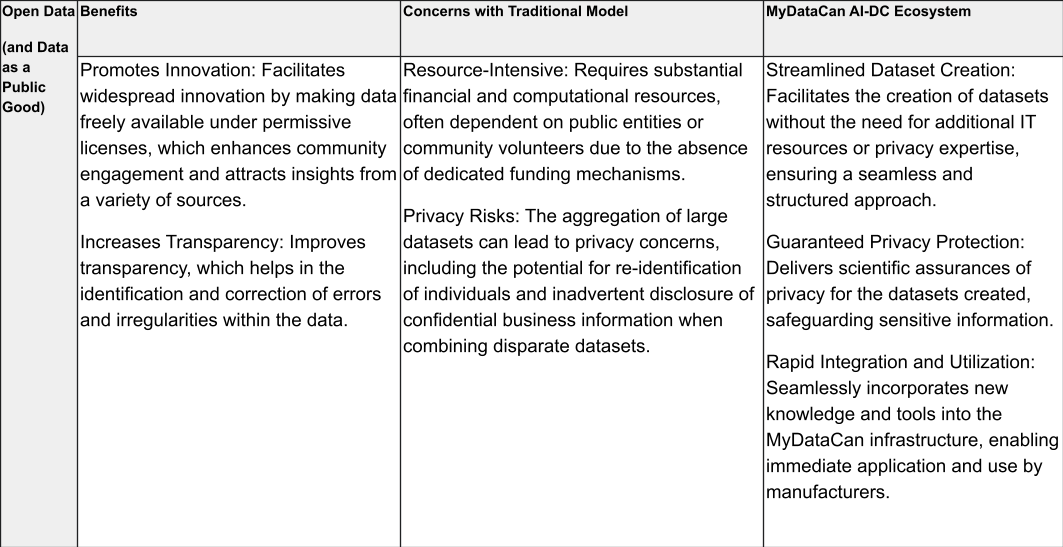

1. Open Data

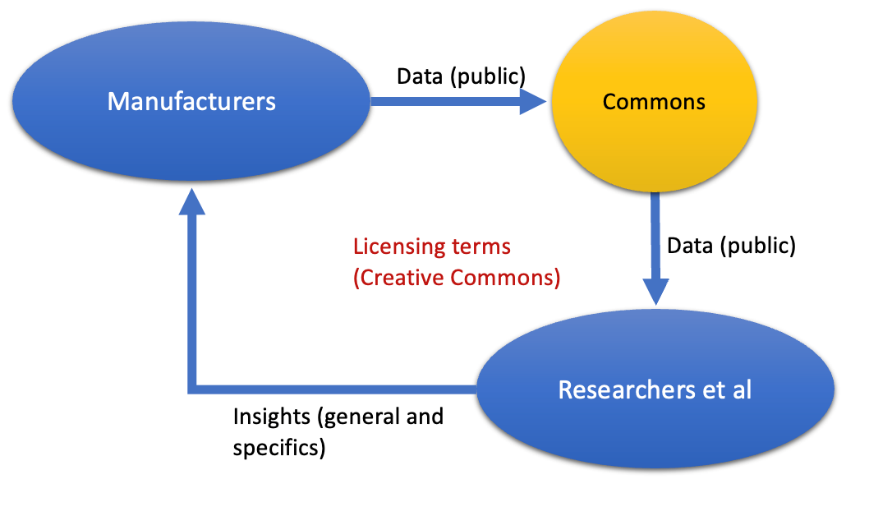

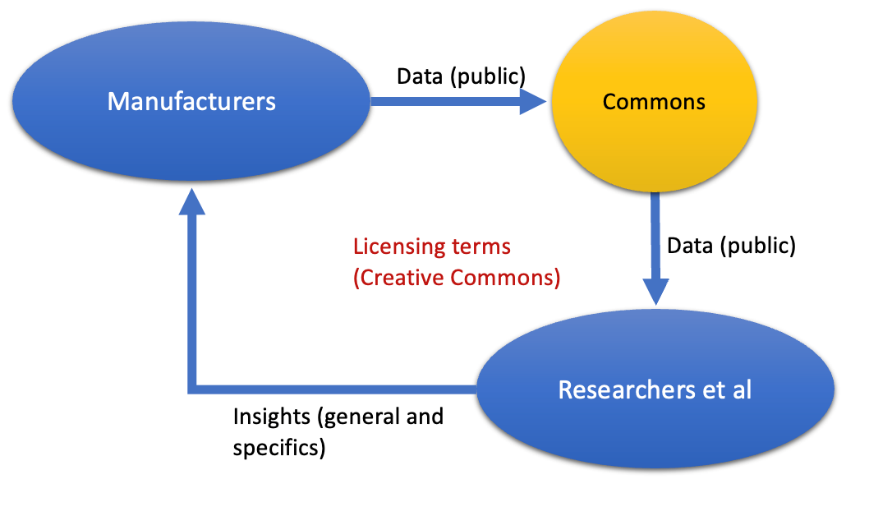

The Open Data model strives to maximize data accessibility with minimal usage restrictions, typically governed by Creative Commons licenses. These licenses let data owners retain copyright in the content they create while allowing extensive use of their data (refer to Figure 6a) [110]. By offering data under highly permissive terms, Open Data makes it available to a wide array of stakeholders. This approach promotes innovation by encouraging broad community engagement, increasing the likelihood of generating insights from diverse and unexpected sources [111]. Additionally, the model enhances transparency, which in turn facilitates the identification and correction of data anomalies and errors.

For instance, Wikidata operates under a public domain license, encouraging unrestricted reuse and enhancement of data [112] [113]. Similarly, platforms like the World Bank and Data.gov utilize licenses that facilitate open access to their data, promoting transparency and innovation by enabling anyone to contribute to or benefit from the data without complex legal barriers [114] [115] [116].

The Open Data model, while beneficial, presents several challenges for SMEs, especially in manufacturing: they may lack the IT resources needed to analyze and process the data effectively, and they may be concerned about the confidentiality of shared internal data. Aggregating and further processing vast amounts of data from disparate sources demands substantial financial and computational resources. Because the Open Data model lacks a built-in fundraising mechanism, it depends on the willingness of entities to support Open Data initiatives as a public service; consequently, many significant projects are either managed by large public entities or maintained by communities of volunteers. Furthermore, aggregating vast amounts of data in a publicly and freely accessible setting can introduce additional privacy issues.

Given these challenges, the Open Data model alone is insufficient for SMEs. However, by implementing Open Data within a MyDataCan-powered AI-DC, manufacturers can collaboratively create privacy-preserving datasets without needing extensive internal expertise. This integration lets manufacturers apply the resulting insights through MyDataCan, with support from commercial providers or engineers, thus addressing the direct needs of participating manufacturers.

Figure 6 depicts two distinct frameworks: (a) the Generic Open Data Model and (b) a MyDataCan AI-DC Ecosystem for generating and sharing datasets under a Creative Commons License. Figure 6a illustrates the traditional Open Data Model, in which manufacturers bear the IT and data production costs and face potential privacy concerns associated with the data. In contrast, Figure 6b shows a MyDataCan AI-DC Ecosystem in which these costs and privacy concerns are mitigated. In this ecosystem, datasets are shared widely, and the structured, secure environment provided by MyDataCan removes the traditional cost and privacy barriers.

Figure 6b depicts manufacturers using a MyDataCan AI-DC to reap the benefits of an open data engagement. Four manufacturing SMEs (Amfg, Bmfg, Cmfg, and Dmfg) continuously upload data from their operations to MyDataCan using app I. This data, along with other information, is visualized and analyzed within their private storage silos through app Y. During a collaborative workshop, these manufacturers discuss with internal researchers and experts the potential of creating a shared dataset from their machine data to foster the development of new tools. Amfg, Bmfg, and Dmfg agree to merge their data, with robust privacy measures in place to protect worker information and maintain customer confidentiality. The resulting dataset, confirmed to be provably anonymous while remaining valuable for its intended use, is openly shared with external communities under a Creative Commons License. External contributors then build new tools on the dataset; one proves especially useful to manufacturers and is subsequently developed into a new app on the MyDataCan platform. An affiliated commercial provider offers training and support to SME manufacturers that choose to use this new tool. This example demonstrates how the AI-DC ecosystem and its technological infrastructure support manufacturing SMEs using an Open Data model.
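The paper does not specify how the merged dataset is confirmed to be anonymous. As one simple, checkable illustration (an assumption, not necessarily the method MyDataCan uses), the sketch below merges records from Amfg, Bmfg, and Dmfg, strips direct identifiers such as worker IDs and customer names, and suppresses any record whose combination of quasi-identifiers is shared by fewer than k records, so that the release satisfies k-anonymity. All field names and values are hypothetical, and k-anonymity is a weaker guarantee than formal approaches such as differential privacy.

```python
from collections import Counter

# Hypothetical field names; the paper does not specify the dataset schema.
DIRECT_IDENTIFIERS = {"operator_id", "customer"}               # removed outright
QUASI_IDENTIFIERS = ("machine_type", "shift", "plant_region")  # could enable linkage


def qi_key(record):
    """Tuple of quasi-identifier values used to group records."""
    return tuple(record[q] for q in QUASI_IDENTIFIERS)


def strip_identifiers(records):
    """Drop direct identifiers such as worker IDs and customer names."""
    return [{k: v for k, v in r.items() if k not in DIRECT_IDENTIFIERS} for r in records]


def is_k_anonymous(records, k):
    """True if every quasi-identifier combination occurs at least k times."""
    return all(count >= k for count in Counter(qi_key(r) for r in records).values())


def build_shared_dataset(sources, k):
    """Merge the sources, strip identifiers, and suppress groups smaller than k."""
    merged = [r for source in sources for r in strip_identifiers(source)]
    counts = Counter(qi_key(r) for r in merged)
    released = [r for r in merged if counts[qi_key(r)] >= k]
    if not is_k_anonymous(released, k):  # safeguard; holds by construction here
        raise RuntimeError("release would violate k-anonymity")
    return released


def rec(op, cust, mtype, shift, region, cycle_time_s):
    return {"operator_id": op, "customer": cust, "machine_type": mtype,
            "shift": shift, "plant_region": region, "cycle_time_s": cycle_time_s}


# Illustrative records for the three manufacturers that agree to merge.
amfg = [rec("a1", "C1", "cnc", "day", "midwest", 41.0), rec("a2", "C2", "cnc", "night", "midwest", 44.5)]
bmfg = [rec("b1", "C3", "cnc", "day", "midwest", 39.8), rec("b2", "C3", "press", "day", "south", 12.1)]
dmfg = [rec("d1", "C4", "cnc", "night", "midwest", 43.9), rec("d2", "C5", "cnc", "day", "midwest", 40.3)]

shared = build_shared_dataset([amfg, bmfg, dmfg], k=2)
print(len(shared))  # 5: the lone "press" record is suppressed
```

Suppression is the bluntest option; generalizing values (for example, coarsening the plant region) would usually preserve more records at the same k.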

In summary, while the Open Data model supports extensive data sharing and innovation, it demands careful consideration of resource allocation and privacy risks. For manufacturing SMEs, utilizing this model through a MyDataCan AI-DC can mitigate these challenges, offering a structured approach to leverage Open Data effectively while ensuring data confidentiality and usability.

Figure 6: Comparison of Open Data models to produce and facilitate wide data sharing for a dataset under a Creative Commons license: (a) The Generic Open Data Model, which includes IT and data production costs borne by SME manufacturers that organize themselves collectively and entails potential privacy concerns; and (b) a MyDataCan AI-DC Ecosystem, demonstrating how these costs and privacy concerns are mitigated by automated tools and processing.

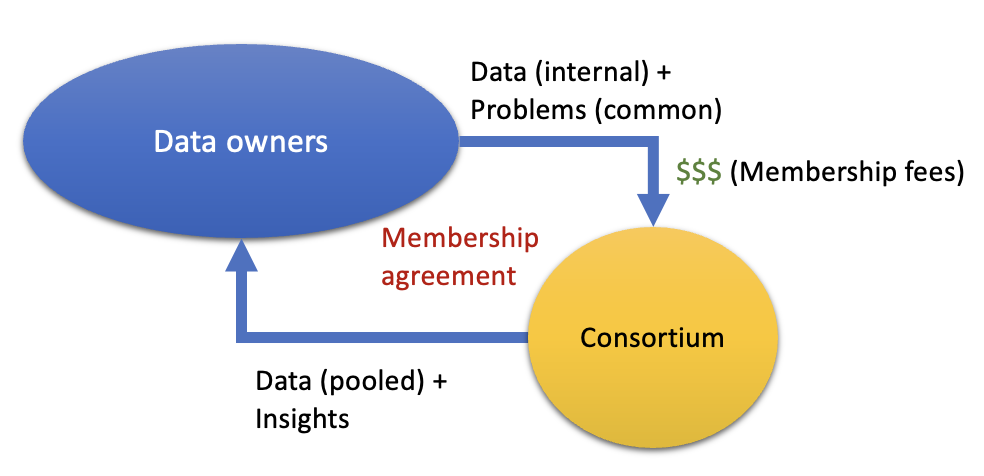

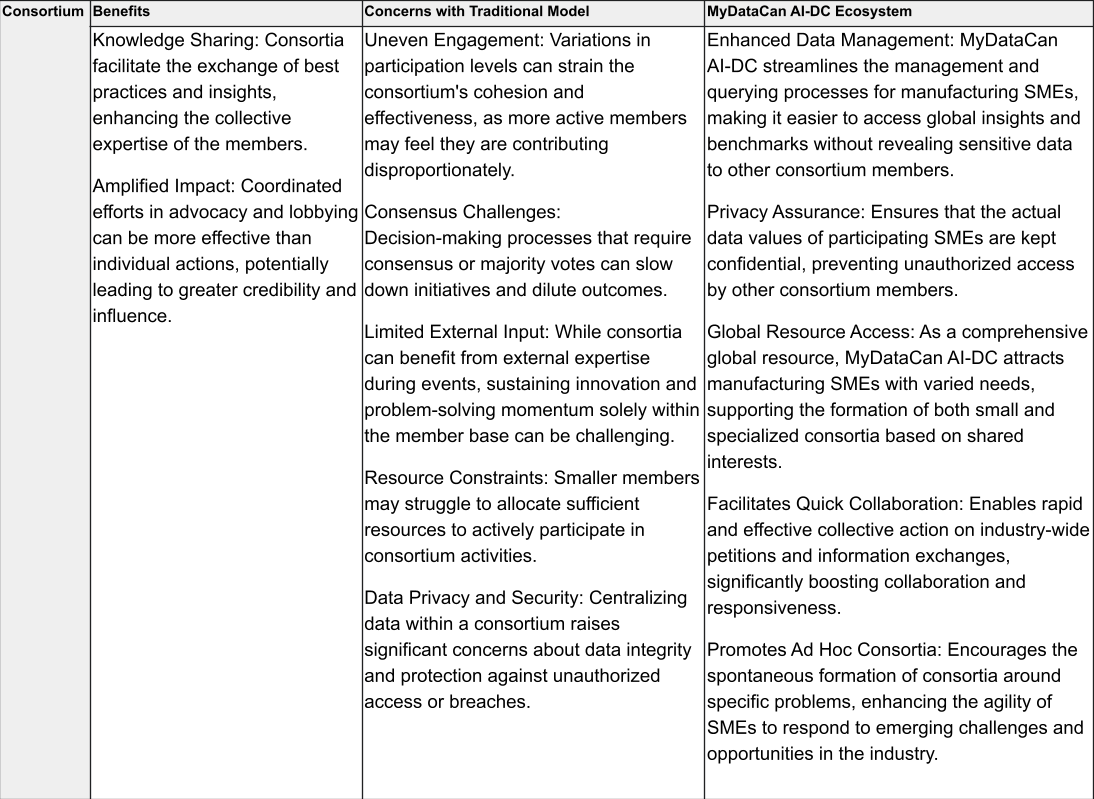

2. Data Consortium

In a consortium, multiple organizations form a collaborative alliance to pool resources or engage in joint activities in pursuit of common goals. Consortia vary significantly in structure and formality, ranging from loose associations of members bound by a contractual agreement but without a separate legal entity, to organizations with their own legal identity [117] [118].

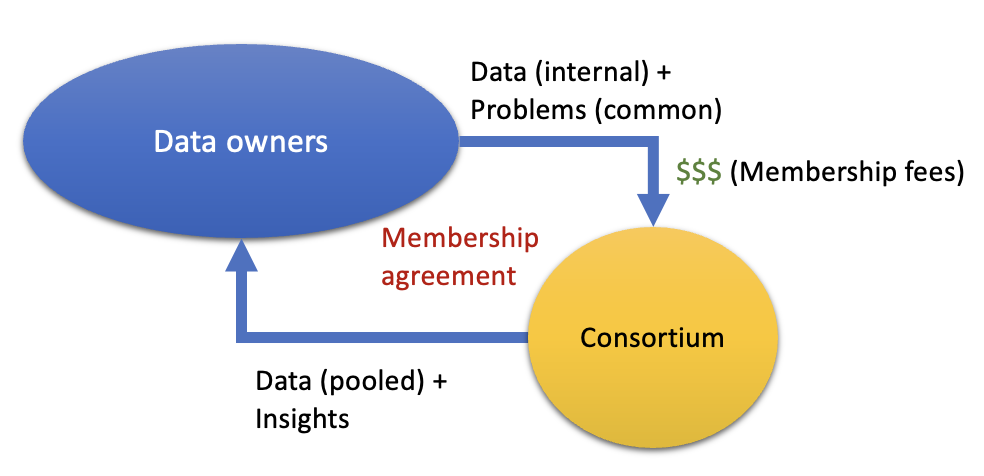

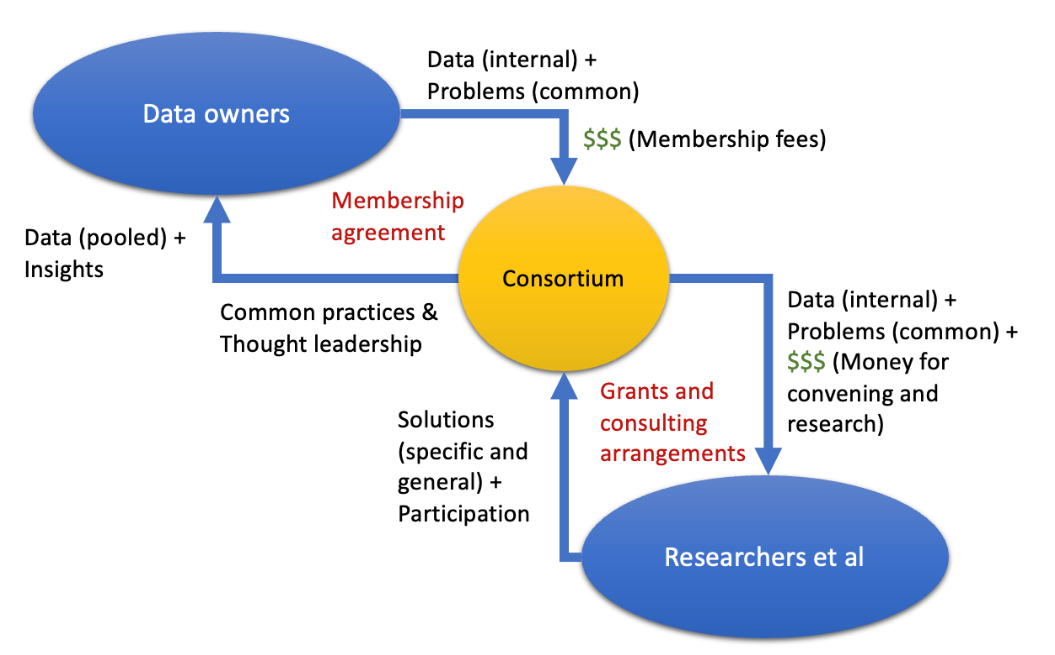

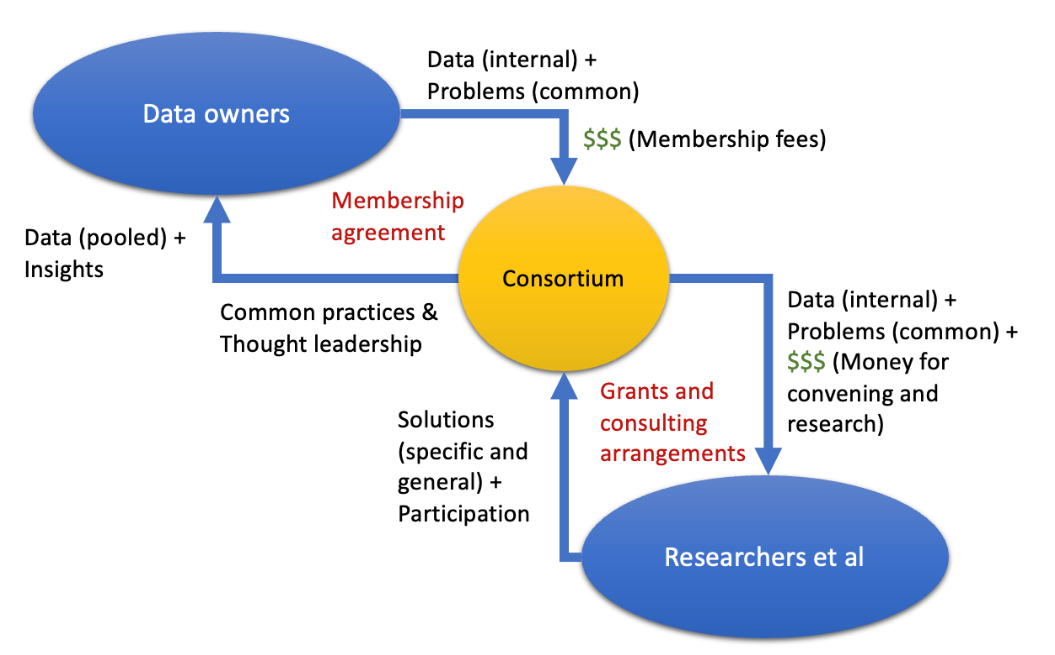

Certain consortia are more concerned with inward-facing activities such as sharing data, while others focus on outward-facing activities such as developing new infrastructure for data sharing, advocating for a change in sharing policies, or disseminating lessons learned. Others engage in a mix of activities (Figure 7) [119] [120] [121] [122].

Figure 7. Two generic consortium models based on (a) inward-facing or (b) outward-facing objectives.

Inward-facing consortia primarily focus on internal data sharing to address specific challenges faced by their members (Figure 7a). By pooling data, members can identify patterns and derive insights that are not discernible when working with isolated datasets. The work is carried out by members independently or by consortium employees [37]. This data pooling is often governed by agreements that specify the conditions under which data can be accessed and shared among members [37], with access to other participants’ data often being conditional on an expectation [38] or requirement to share one’s own data [41] [42].
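The requirement that members share their own data before accessing others' data can be expressed as a simple access rule. The following sketch is hypothetical: the `ConsortiumPool` class and its methods are illustrative names, and real sharing agreements typically specify finer-grained conditions (which fields, for what purposes, for how long).

```python
from dataclasses import dataclass, field


@dataclass
class ConsortiumPool:
    """Hypothetical pooled store enforcing a give-to-get rule: a member may read
    the pooled records only after contributing records of its own."""
    members: set
    records: dict = field(default_factory=dict)  # member name -> list of rows

    def _check_member(self, member):
        if member not in self.members:
            raise PermissionError(f"{member} is not a consortium member")

    def contribute(self, member, rows):
        self._check_member(member)
        self.records.setdefault(member, []).extend(rows)

    def query(self, member):
        self._check_member(member)
        if not self.records.get(member):
            raise PermissionError(f"{member} must contribute data before accessing the pool")
        return [row for rows in self.records.values() for row in rows]


pool = ConsortiumPool(members={"Amfg", "Bmfg", "Cmfg"})
pool.contribute("Amfg", [{"scrap_rate": 0.04}])
pool.contribute("Bmfg", [{"scrap_rate": 0.06}, {"scrap_rate": 0.05}])
print(len(pool.query("Amfg")))  # 3 pooled rows
# pool.query("Cmfg") raises PermissionError: Cmfg has not contributed yet
```

In this traditional form, contributing members see one another's raw rows, which is precisely the exposure that makes SMEs hesitant to participate.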

Outward-facing consortia aim to influence broader industry standards and frameworks through activities such as policy advocacy, infrastructure development, and public dissemination of findings (Figure 7b). Membership fees typically fund activities like workshops that explore potential solutions and foster thought leadership.

There are many highly effective industry consortia that have made lasting contributions to innovation and standardization. For example, the Semiconductor Manufacturing Technology Consortium (SEMATECH), formed in 1987, united U.S. semiconductor companies with the federal government to bolster domestic competitiveness in chip manufacturing. SEMATECH played a pivotal role in advancing standardized processes and driving innovation, significantly enhancing U.S. semiconductor production capabilities [123]. Similarly, the World Wide Web Consortium (W3C), established in 1994, has been critical in developing and maintaining open web standards such as HTML, CSS, and XML. Its collaborative, international model has ensured the web remains a robust, interoperable platform for global communication and commerce [124].

For SMEs in manufacturing, the data consortium model presents additional opportunities and obstacles. While pooling data can provide valuable insights into operational efficiencies and process improvements, SMEs must be cautious about sharing sensitive information that could compromise their competitive edge or reveal vulnerabilities. The reluctance to share detailed data can hinder the full realization of the consortium's potential benefits.

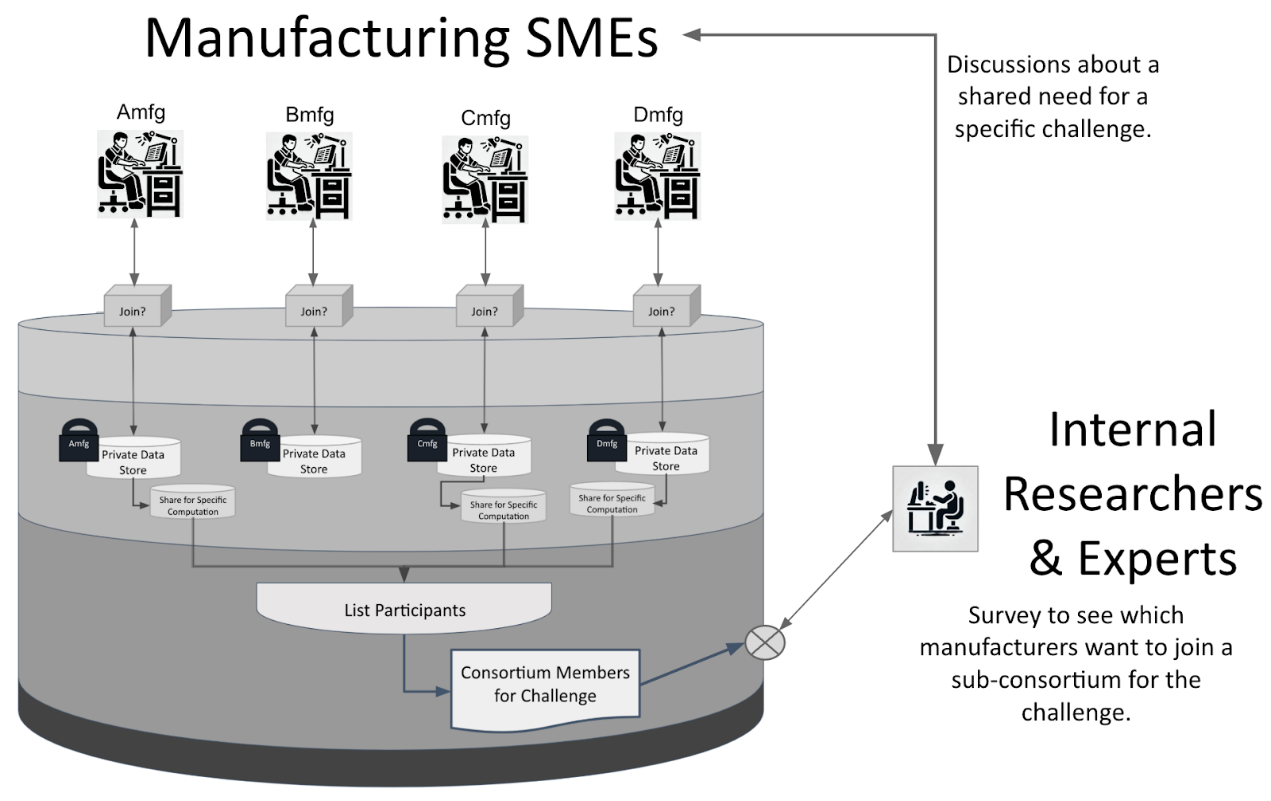

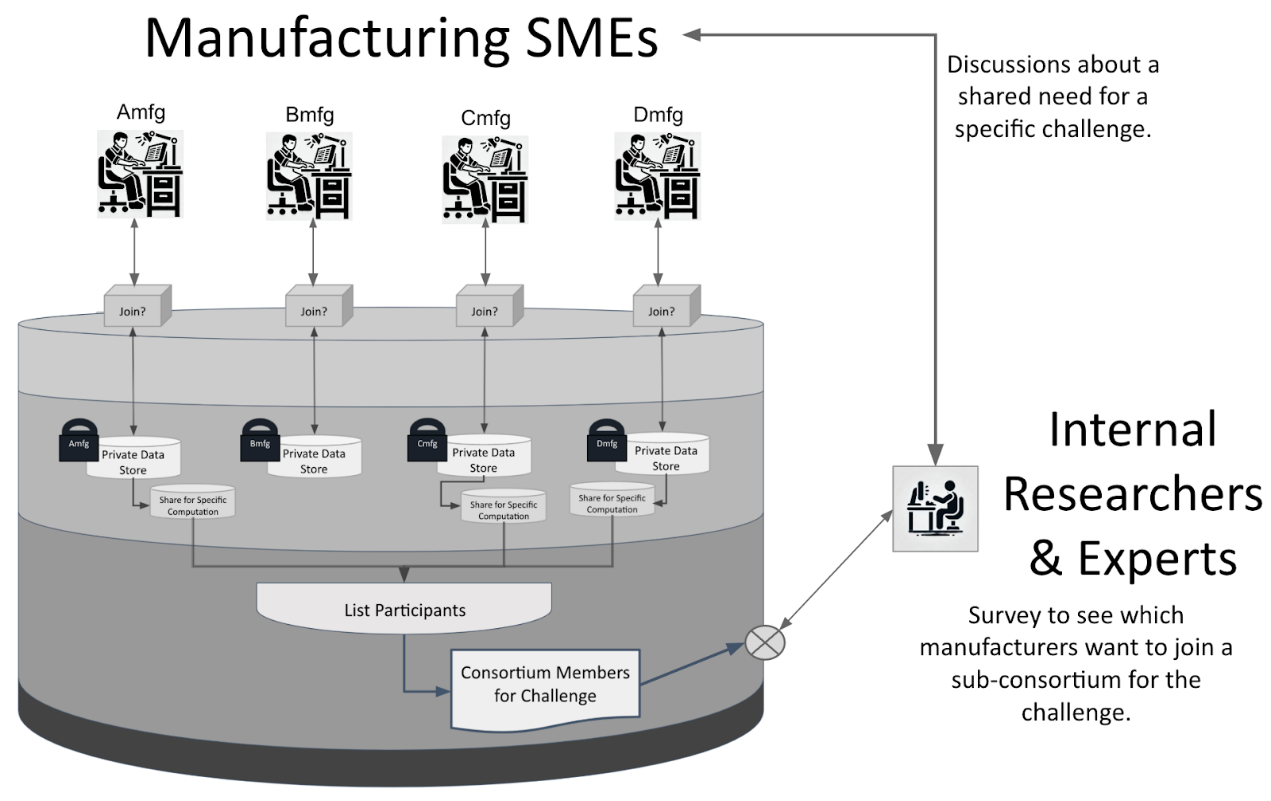

A MyDataCan AI-DC offers significant advantages for inward-facing consortia, particularly for manufacturing SMEs. It simplifies the management, querying, and acquisition of global insights and benchmarks from shared data while ensuring that actual data values remain concealed from other participants. Additionally, as a global resource, a MyDataCan AI-DC attracts manufacturing SMEs for various purposes and then facilitates the formation of small, ad hoc consortia around common issues. This enables swift collective action on industry petitions, information sharing, and data exchange, enhancing collaboration and responsiveness across consortia.
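A benchmark query of this kind can return pool-level statistics and the requester's own relative position while withholding every other member's individual value. The sketch below assumes that a trusted component already holds the contributed values and applies a minimum cohort size before releasing anything; both the threshold and the function name are hypothetical. The secret-sharing protocol described in this section is one way to avoid placing that trust in any single component.

```python
from statistics import mean, median

MIN_COHORT = 5  # assumed disclosure threshold; not specified in the paper


def benchmark(values_by_member, requester):
    """Return pool-level statistics and the requester's own relative position,
    but never another member's individual value."""
    if requester not in values_by_member:
        raise PermissionError("requester has not contributed a value")
    if len(values_by_member) < MIN_COHORT:
        raise ValueError("cohort too small to release a benchmark")
    values = list(values_by_member.values())
    own = values_by_member[requester]
    return {
        "cohort_size": len(values),
        "pool_mean": mean(values),
        "pool_median": median(values),
        "own_value": own,
        "share_of_members_below": sum(v < own for v in values) / len(values),
    }


# Hypothetical overall equipment effectiveness (OEE) figures for five members.
oee = {"Amfg": 0.62, "Bmfg": 0.71, "Cmfg": 0.55, "Dmfg": 0.68, "Emfg": 0.74}
report = benchmark(oee, "Amfg")
print(report["pool_median"], report["share_of_members_below"])  # 0.68 0.2
```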

Consortia are often formed to calculate aggregate industry or regional statistics that serve as benchmarks for individual companies. For instance, as illustrated in Figure 8, a group of manufacturing SMEs uses a MyDataCan AI-DC to compute gender wage gap statistics within their workforce using a provably secure protocol, formally a secret-sharing multiparty computation technique [104]. Among other notable uses [106], this technique was previously used in Boston to analyze gender wage gaps across companies [105].

Overview of Operation: Each manufacturer submits data—job position, salary, and employee gender—into their designated private storage silos within the MyDataCan platform, adhering to a sharing agreement that preserves the confidentiality of individual data entries while enabling the computation of collective averages (see Figure 8a).
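Because the target statistics are averages, each manufacturer's contribution can be reduced to per-(position, gender) salary totals and headcounts, which simply add across companies. The sketch below computes a position-level gap in the clear to show what the joint computation must produce; the gap definition (one minus the ratio of mean female to mean male salary), the function names, and the figures are assumptions for illustration.

```python
from collections import defaultdict


def local_summary(rows):
    """Collapse one manufacturer's (position, salary, gender) rows into
    per-(position, gender) salary totals and headcounts."""
    totals = defaultdict(lambda: [0, 0])  # key -> [salary_sum, headcount]
    for position, salary, gender in rows:
        totals[(position, gender)][0] += salary
        totals[(position, gender)][1] += 1
    return dict(totals)


def combine(summaries):
    """Add summaries key by key. This is done in the clear here; the secure
    protocol is what keeps each manufacturer's summary hidden."""
    combined = defaultdict(lambda: [0, 0])
    for summary in summaries:
        for key, (salary_sum, headcount) in summary.items():
            combined[key][0] += salary_sum
            combined[key][1] += headcount
    return dict(combined)


def wage_gap(combined, position):
    """Assumed definition: 1 - mean female salary / mean male salary."""
    f_sum, f_n = combined[(position, "F")]
    m_sum, m_n = combined[(position, "M")]
    return 1 - (f_sum / f_n) / (m_sum / m_n)


amfg = [("machinist", 52000, "F"), ("machinist", 56000, "M")]
bmfg = [("machinist", 50000, "F"), ("machinist", 54000, "M"), ("machinist", 58000, "M")]
combined = combine([local_summary(amfg), local_summary(bmfg)])
gap = wage_gap(combined, "machinist")
print(round(gap, 4))  # 0.0893
```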

Protocol Execution:

- Initialization: Secure virtual machines (VMs) are created in the cloud for each participating manufacturer. Each VM is allocated exclusively to a manufacturer, ensuring that all private salary information remains confined to its respective VM (Figure 8b).

- Data Distribution: Each VM splits each salary value into fragments, keeps one fragment private, and sends the remaining fragments to the other VMs. Because every VM withholds a fragment, no other VM receives a complete salary value or a complete dataset (Figure 8c).

- Data Aggregation: Each VM combines the fragments it received from the others with the fragment it kept. This combined result is then sent to a central reporting VM without revealing the source of any fragment or any complete original value (Figure 8d).

- Reporting: The reporting VM calculates the final aggregate statistics from the combined fragments and outputs the results. This stage concludes the protocol; the aggregate statistics are derived accurately without exposing individual salaries or company-specific information (Figure 8e).

This multi-stage protocol uses secure multiparty computation so that, although individual contributions remain hidden, the resulting aggregate statistics are accurate and informative, fulfilling the consortium's benchmarking objectives without compromising privacy. It is one of many ways to provide meaningful information to manufacturing SMEs while upholding stringent privacy and confidentiality standards.
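The distribution, aggregation, and reporting stages correspond to additive secret sharing. The single-process simulation below is a minimal sketch under stated assumptions: the VMs follow the protocol honestly and do not collude, salaries are non-negative integers, and the modulus is an arbitrary prime chosen to exceed any realistic total. It computes a sum; headcounts and per-group totals would be obtained by running it once per quantity.

```python
import secrets

# Shares live in the integers modulo a prime larger than any possible total
# (assumed value; the paper does not specify one).
MODULUS = 2**61 - 1


def split(value, n_parties):
    """Additive secret sharing: n uniformly random shares summing to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def run_protocol(salaries_by_vm):
    """Simulate the distribution, aggregation, and reporting stages in one process."""
    vms = list(salaries_by_vm)
    held = {vm: [] for vm in vms}  # shares each VM ends up holding
    # Data distribution: every salary is split into one share per VM; the owner
    # keeps the share addressed to itself and sends the rest to the other VMs.
    for salaries in salaries_by_vm.values():
        for salary in salaries:
            for holder, share in zip(vms, split(salary, len(vms))):
                held[holder].append(share)
    # Data aggregation: each VM adds up what it holds. With two or more VMs, this
    # partial sum is uniformly random and reveals nothing about others' salaries.
    partial_sums = [sum(held[vm]) % MODULUS for vm in vms]
    # Reporting: only the sum of all partial sums equals the true total.
    return sum(partial_sums) % MODULUS


salaries = {"Amfg": [52000, 50000], "Bmfg": [50000], "Cmfg": [61000]}
print(run_protocol(salaries))  # 213000
```

Each fragment on its own is a uniformly random number, so a VM learns nothing from what it receives; only the reporting stage, which adds every partial sum, recovers the total.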

In summary, while consortia offer a platform for collaboration and shared problem-solving, the effectiveness of such models depends on the alignment of member interests, the robustness of data-sharing agreements, and the ability to maintain equitable and active participation across all members. For manufacturing SMEs, careful consideration of the risks and rewards of participating in a data consortium is essential to determine its suitability.

Figure 8. Gender Wage Gap Analysis in Manufacturing SMEs. (a) Manufacturers initialize with sensitive data, (b) virtual machines are configured, (c) data fragments are shared securely, (d) fragments are combined by a central machine, which (e) computes aggregate statistics, after which (f) results are distributed to manufacturers and potentially externally.

3. Grand Challenges

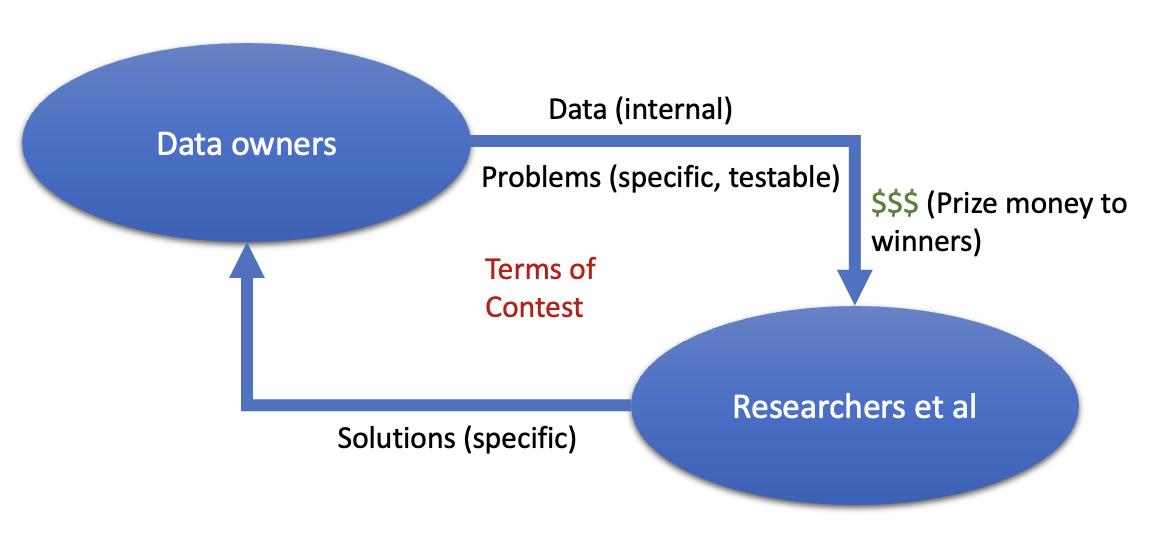

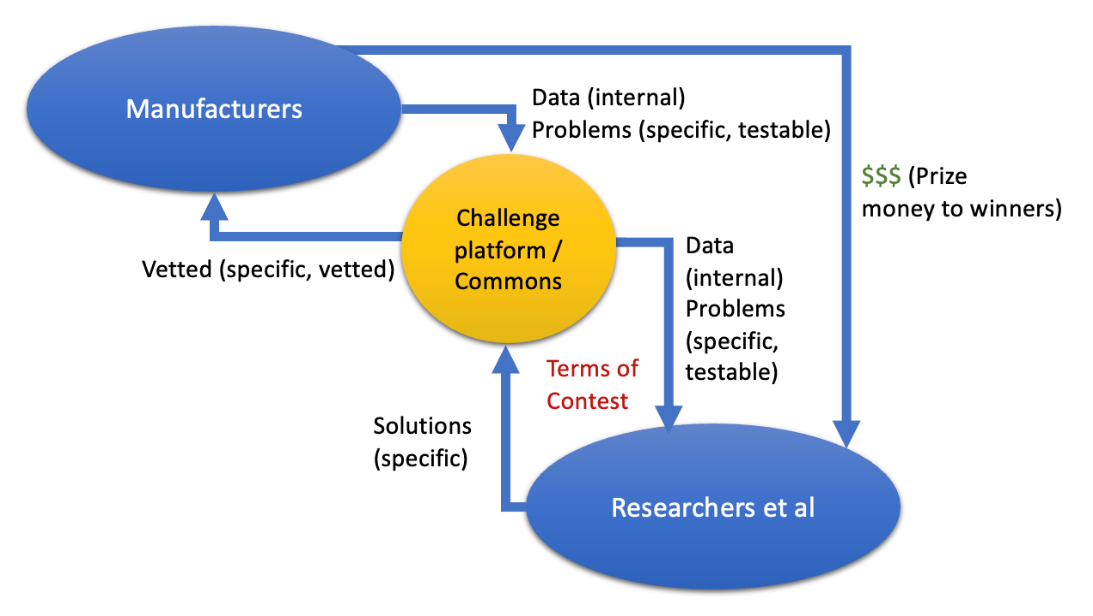

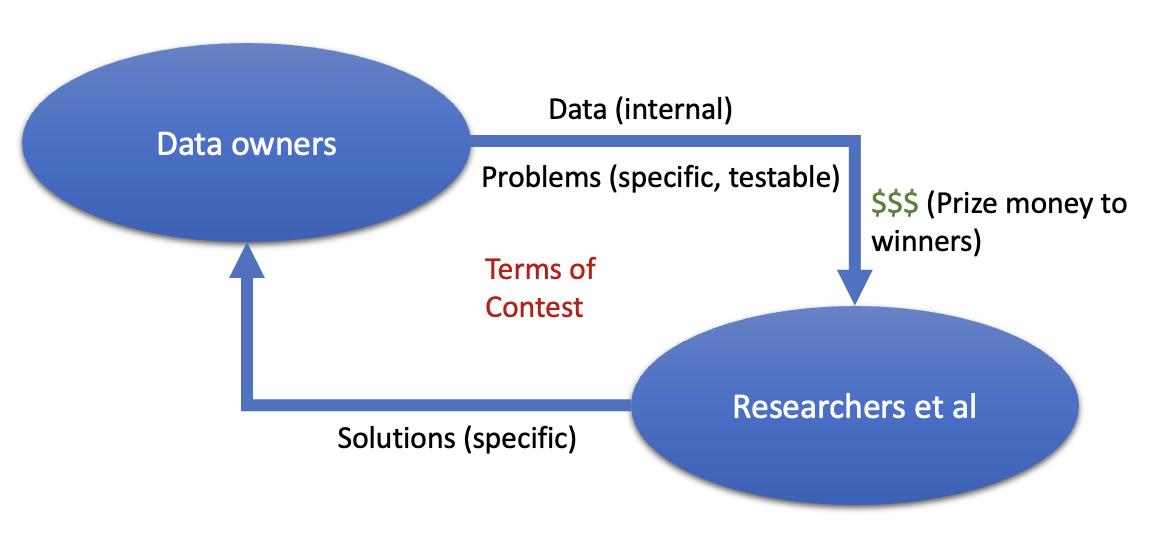

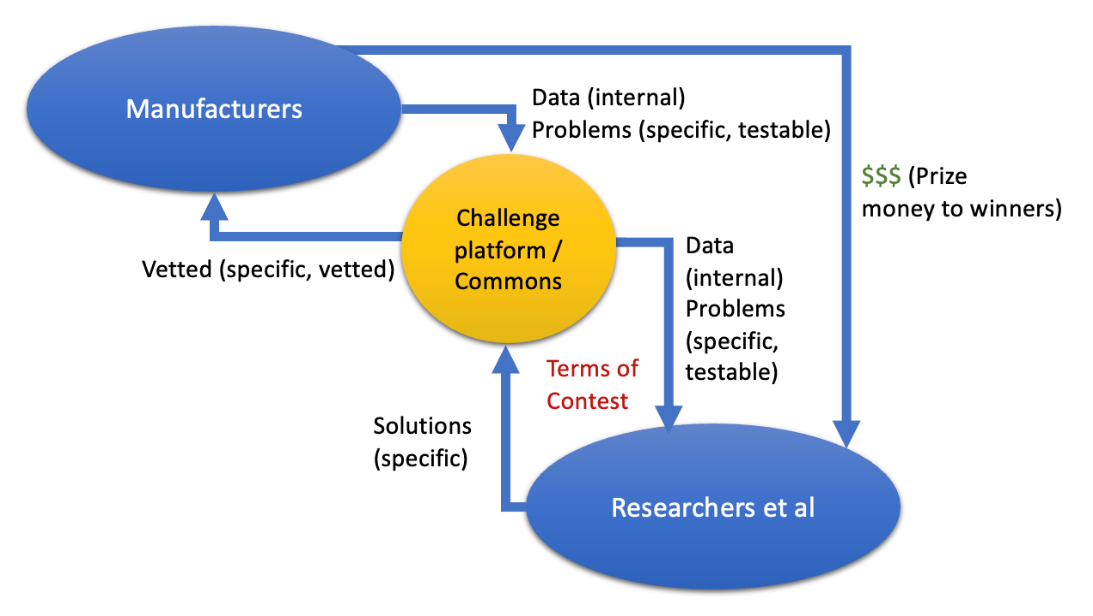

Grand Challenges involve organizations specifying a problem they want solved, providing the necessary data, and offering prizes for the best solutions. This model incentivizes innovation by rewarding future achievements rather than past ones [125] and fosters a competitive environment. Participants, such as researchers, agree to terms of use for the data and accept the sponsoring organizations' conditions regarding ownership of their solutions. To support collaborative problem-solving, organizers often provide forums for participant interaction and require that interim solutions be published [126] [127] [58] [50].

Large organizations may directly host challenges, where they create the dataset, set the terms, and promote the contest to benefit their own goals (Figure 9a). Platforms like Kaggle facilitate the hosting and management of challenges, making them accessible to a broader range of organizations (Figure 9b). Additionally, these platforms can aid in the design and planning of challenges, functioning similarly to innovation consultancies like Nesta [128].

Figure 9. Two Models for Grand Challenges in which contest creation and management are conducted by (a) the originating data holders or (b) a dedicated platform for hosting grand challenges.

Grand Challenges offer several benefits. They foster community engagement around specific issues, increasing the chances of sustained advancements [129]. These contests appeal to participants by offering monetary rewards, recognition, and compelling problems, promoting the development of solutions that benefit both organizers and participants. Organizers incur limited financial risk, since rewards are disbursed only when successful solutions are identified, and successful challenges can profoundly shape industry standards and practices.
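The pay-only-on-success property typically rests on scoring submissions against data that only the organizer holds and comparing the best score with a success criterion fixed in advance. The sketch below assumes a hypothetical predictive-maintenance task scored by mean absolute error; the metric, threshold, team names, and values are illustrative.

```python
def mean_absolute_error(predictions, truth):
    if len(predictions) != len(truth):
        raise ValueError("submission length does not match the hidden test set")
    return sum(abs(p - t) for p, t in zip(predictions, truth)) / len(truth)


def evaluate_challenge(submissions, hidden_truth, success_threshold):
    """Score submissions on labels only the organizer holds. Return the best
    entrant only if it meets the predefined success criterion; None means no payout."""
    scores = {name: mean_absolute_error(preds, hidden_truth)
              for name, preds in submissions.items()}
    best = min(scores, key=scores.get)
    winner = best if scores[best] <= success_threshold else None
    return winner, scores


# Hypothetical predictive-maintenance task: hours until the next failure.
hidden_truth = [10, 12, 9, 11]
submissions = {"team_a": [11, 12, 10, 11], "team_b": [8, 15, 9, 14]}
print(evaluate_challenge(submissions, hidden_truth, success_threshold=1.0))
# ('team_a', {'team_a': 0.5, 'team_b': 2.0})
```

With a stricter threshold (say 0.25), no entrant qualifies and no prize is paid, although the organizer has still borne the fixed costs of running the contest.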

Grand Challenges come with certain drawbacks. First, challenges must be explicitly defined with realistic success criteria; overly ambitious or vague criteria may discourage participation or yield impractical solutions. There are also risks associated with data availability, including potential privacy breaches or the re-identification of supposedly anonymous data, as evidenced by the Heritage Health Prize and Netflix Prize incidents. Moreover, even though rewards are issued only for successful solutions, organizers still face inherent fixed costs for designing the challenge, preparing data, engaging participants, and evaluating solutions.
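Re-identification incidents of this kind generally exploit linkage: records stripped of names can still be matched to outside data on the attributes they share. The toy example below illustrates that mechanism only; it is not a reconstruction of the Netflix Prize or Heritage Health Prize analyses, and all records and names are fictional.

```python
def link(released, auxiliary, keys):
    """Return {released_index: name} for released records that match exactly one
    auxiliary record on every key; a unique match re-identifies the record."""
    matches = {}
    for i, record in enumerate(released):
        candidates = [a["name"] for a in auxiliary if all(a[k] == record[k] for k in keys)]
        if len(candidates) == 1:
            matches[i] = candidates[0]
    return matches


# "Anonymized" challenge data: no names, but a sensitive field remains.
released = [
    {"role": "welder", "shift": "night", "start_year": 2019, "incidents": 2},
    {"role": "machinist", "shift": "day", "start_year": 2015, "incidents": 0},
    {"role": "machinist", "shift": "day", "start_year": 2015, "incidents": 1},
]
# Public auxiliary data, e.g. a staff directory (fictional names).
auxiliary = [
    {"name": "J. Doe", "role": "welder", "shift": "night", "start_year": 2019},
    {"name": "R. Roe", "role": "machinist", "shift": "day", "start_year": 2015},
    {"name": "S. Poe", "role": "machinist", "shift": "day", "start_year": 2015},
]
hits = link(released, auxiliary, keys=("role", "shift", "start_year"))
print({name: released[i]["incidents"] for i, name in hits.items()})
# {'J. Doe': 2}
```

Records whose attribute combination is shared by several people (the two machinists) are not uniquely matched, which is the intuition behind suppressing or generalizing small groups before release.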

For manufacturing SMEs, the Grand Challenge model poses unique risks, including inadvertently revealing strategic internal issues to competitors. Manufacturing datasets often require iterative problem-solving that does not align well with the fixed structure of Grand Challenges, which assumes that a particular manifestation of a problem will persist through the creation and implementation of the challenge. The public disclosure of sensitive business information could disadvantage SMEs competitively, particularly if larger firms exploit this openness. Therefore, while Grand Challenges can drive innovation and collaboration, without careful safeguards they may not be the most suitable model for the nuanced and confidential needs of manufacturing SMEs.

Nevertheless, Grand Challenges also present significant opportunities for manufacturing SMEs by driving innovation on their specific problems. The following are ten potential Grand Challenges that could be particularly beneficial for manufacturing SMEs:

- Predictive Maintenance Modeling: Develop AI-driven predictive models that use sensor and machine data to predict maintenance needs, reducing downtime and extending equipment life.

- Supply Chain Optimization: Create a challenge to develop algorithms that optimize supply chain logistics based on real-time data, helping SMEs minimize costs and improve efficiency.

- Energy Consumption Reduction: Design a challenge to create systems that analyze and optimize energy use across manufacturing processes, aiming to reduce costs and carbon footprints.

- Quality Control Automation: Use AI to develop automated quality control systems that analyze product data during manufacturing to detect defects early in the process.

- Workforce Optimization: Develop models that analyze workforce data to optimize task assignments and productivity, ensuring efficient use of human talent.

- Customization and Personalization: Create software solutions that leverage customer data to customize and personalize products in real time during manufacturing.

- Waste Reduction: Challenge participants to develop systems that analyze production data to identify waste and inefficiencies, promoting sustainable manufacturing practices.

- Safety Enhancement: Use data from sensors and IoT devices to develop systems that predict and prevent workplace accidents and improve overall safety conditions.

- Market Demand Prediction: Develop predictive analytics tools that use market data and trends to forecast product demand, helping SMEs adjust production schedules and inventory.

- Real-Time Tracking and Reporting: Create a challenge for the development of real-time tracking systems that integrate with existing manufacturing systems to provide live feedback and analytics on production status, helping manufacturers make quicker decisions.